We are seeing the first guidance to students on the use of ChatGPT and AI tools in assessment starting to emerge. Getting terminology correct on this is a little challenging, so we are going to explore this.

Much of the guidance is, for the time being at least, telling students not to use AI in assessment. We aren’t making a comment on this advice here – that is very much an academic issue, although we do think that these statements are going to be a holding position while assessment and curriculum are reviewed to reflect a world where AI is pervasive.

There are some risks in the wording of these statements that might not be immediately obvious though, so we’ll explore that specific issue in a bit more detail now.

Risks in getting the terminology wrong include:

- Inadvertently banning applications such as Microsoft Office or Grammarly.

- Inadvertently classifying the use of common features in tools such as Microsoft Office 365 as academic misconduct.

- Missing applications other than ChatGPT that use generative text that you’d wish to include.

We’ll suggest that perhaps an alternative approach might avoid some of these issues. Clarifying acceptable student behaviour rather than specifying prohibited technology might work better.

The problem lies in how embedded AI is in our everyday tools already, and how this is likely to develop over the next few months.

We’ll have a look at some phrases that might cause problems now.

Prohibiting AI and AI tools

Examples:

- “Students must not use AI tools in assessment”

- “Use of AI in assessment is prohibited”

The problem with this is that so many tools we use are AI tools, or contain large amounts of AI functionality, and this is accelerating all the time.

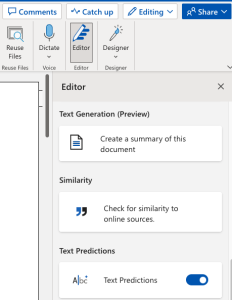

As an example, Microsoft regularly adds new AI features to Microsoft Office, and many more are coming. The ‘Editor’ function includes a range of AI features, including tone suggestions, tools to rewrite text to make it more concise, and a generative AI feature to create summaries. Microsoft talks about this as ‘everyday AI’.

Grammarly is another commonly used application, which may not sound like an AI tool, but actually makes extensive use of AI.

As well as tools used in the writing of an assignment, students are likely to use many other tools which make use of AI, and again, this is increasing all the time. Academic examples of these include research tools such as Scite and Elicit.

We should also be mindful of the fact that AI tools are useful in supporting accessibility and so need to be careful not to inadvertently disadvantage particular groups. AI tools currently in use include Grammarly Premium (often used by dyslexic students) and AI-based transcription tools. Discussions on how tools such as ChatGPT might help accessibility are already underway.

Prohibiting ChatGPT

Examples:

- “Use of ChatGPT in assessment is prohibited”

It might seem tempting just to ban ChatGPT, but the core AI model behind ChatGPT has been available to developers for well over a year and is integrated into many other applications, typically marketed as writing tools. This includes tools such as Jasper, WriteSonic, Rytr and many, many more.

Even web browsers and search engines are imminently going to be using the same or similar technology to ChatGPT.

Creating a list of banned applications that covers all generative AI-based applications is an almost impossible task, and putting the responsibility on the student is problematic, as it’s very hard to tell what technology these applications actually use.

Prohibiting text generated by AI

Examples:

- “Students must not submit text generated by AI”

- “Use of generative text tools is prohibited”

On the face of it, this seems simpler, but then again there are problems with the detail. As I write this in Microsoft Word, I have predictive text enabled, and AI is indeed generating some of the words that I’m writing (it just wrote the word ‘writing’ for me then!).

I make extensive use of Grammarly and often utilize it to revise sentences, improving their readability and rectifying mistakes. Yes, that last sentence was rewritten for me by Grammarly. It’s been generated by AI. This is almost certainly not the kind of use case we actually want to prohibit though.

ChatGPT excels in improving the clarity and readability of text. It’s highly likely that the features it provides for this purpose will soon be integrated into Microsoft Office. And yes, I did get ChatGPT to rephrase that for me, asking it to make it clearer – this sort of functionality is built into many writing tools already and again is probably not the sort of use case we are trying to prohibit, especially as, as we noted above, it’s so widely used to support dyslexic students, for example.

So AI-generated text is already part of the tools that we use every day, and these features are only going to increase. Arguably the first two examples we looked at weren’t created by ‘generative AI’ as the term is commonly used at the moment, but that’s more of an implementation detail, and something that’s going to be very hard for students to determine, as hopefully shown by the third example.

As an aside, going forward there is a very real risk that text modified or generated by everyday writing tools will be flagged by AI detector tools, if we end up going down that route – almost certainly not what we want.

So what should we do?

Trying to give a technical definition of what students can do is fraught with problems.

We’ve explored the academic regulations and policies of a number of universities, and many contain guidance around proofreading, grammar-checking tools and contract cheating that could easily be adapted or applied directly to AI. These regulations and policies describe the behaviours that are unacceptable rather than trying to give a definition of technical tools that are prohibited, and this seems a better approach, especially given the pace of change in AI at the moment.

Plain-language phrases along the lines of “Attempting to pass off work created by AI as your own constitutes academic misconduct”, as seen for example in this guidance from UCL, should avoid the pitfalls of trying to ban hard-to-define technology.

We’re interested in feedback on this, so get in touch if you disagree with this approach or have any suggestions or additional ideas.

Get in touch with the team directly at AI@jisc.ac.uk

5 replies on “Considerations on wording when creating advice or policy on AI use”

I am writing this in my personal capacity, but this is very useful and extremely timely – many thanks for publishing this as we are grappling with this right now! I agree with the position that it is futile (and extremely complicated) to simply “ban” students’ use of AI, given its increasing ubiquity; likewise, approaching this from student behaviours seems sensible. The other challenge will be how (if) students can reference AI in their work (and how they distinguish between ‘everyday AI’ and other generative AI tools). I don’t think we need students referencing when they have used Word to auto-complete a word or perhaps a few words, but what about a sentence? Paragraph…?

Further, given a lot of AI tools are – or will be – “invisible” to the average user (e.g. the MS Design tools), expecting students to know when AI is being used seems unfair and unrealistic as you note, at least without good AI literacy skills being taught as part of their curriculum. Perhaps this is something JISC, working with the wider sector (and perhaps companies like MS if they wish to provide responsible AI tools to the education sector), can consider – a self-paced course?

However, as others have noted, the issue here is not really with AI, but the way we approach assessment design and the purpose of assessment as part of our approach to teaching and learning. Perhaps more fundamentally this will prompt us to (re)consider what “learning” (and writing) means in the brave new world of AI-generated content, alongside human capacity for originality of thought and creativity. I am not saying AI is “good” or “bad” for learning and writing: but it is here, and we do need to start to understand how best we can maximise its use while being mindful of any potential limitations. That is why, personally, I think trying to ‘hide’ from AI is not the right long-term approach, but I can understand why short-term actions may focus on locking things down – as long as they are framed as short-term protections, and not the “solution” to the “problem” of AI in education. In the same way we should value and promote human creativity in learning and teaching, so too should our approach to AI be creative and imaginative.

Thanks!

We can certainly look at the area of AI literacy and courses. I agree, it would definitely be useful. We’ve also just started to look at the referencing issue. We’re just at the stage of exploring it at the moment to see if there is anything we can do to help, even if it’s just sharing good practice we come across.

I agree with you on your last comments too – hopefully that will soon be the main focus of conversation.

Make students handwrite all assignments? Then at least it would be more trouble for them to use AI because they’d have to copy the text out by hand. How will Turnitin handle cheating by the use of AI? Maybe we should reintroduce the Viva Voce?

Thank you for sharing these points Michael. These are the conversations my colleagues are holding and we definitely need to know how other parties are approaching the situation. One thing I strongly believe is that, as with any other technology that has emerged through history, call it the internet, concerns arise around the topic of integrity. Were we able to ban the internet? No. As educators, we had to reimagine our teaching and learning practices. Let’s look at cell phones, same story. Access to information gets closer and closer each time. Our role as educators must be one of mentoring our students in the process of curating information, analyzing it, understanding it and using it properly. The viva voce that Ian mentions should be happening in our classrooms, but in a more natural way where our students feel safe to share their voices and demonstrate their mastery of topics without fear of being judged if they make mistakes. If they make a mistake, they can use these innovative tools to double check and dig deeper.

I really hope that more educators and students could understand this matter and I also consider that we must inform families to help us have these conversations at home and strengthen the culture of integrity. It is a challenging task, but we need to make an effort to avoid a community that is either uninformed or misinformed.

I have been searching for an article or a guide on how to promote ethical usage of AI. I have read this article with keen interest and found the specific examples given handy. We are trying to reword our Academic Misconduct policy and have steered clear of naming specific AI tools. We have worded it at this stage to focus on the behaviour, encouraging students to quote their sources for any AI-generated content and treating it as academic misconduct if large-scale use of AI in assignments demonstrates a lack of original thinking on the student’s part. We use Turnitin, which has released an AI similarity feature, and just like the discretion required by a lecturer to differentiate between similarity and plagiarism, the advice is that where AI similarity is detected, lecturers check for sources and also use their judgement, as they have been doing, to decide if indeed it qualifies as pure copy-paste. At our university, the AI similarity feature, or for that matter any plagiarism detection software, is never an absolute decider on its own but rather a reminder to pause and review. We also set minimal acceptable levels of similarity and again only look for those above the acceptable levels. All of these are just our best efforts and in no way the perfect solutions.

However, we are now spending more time on devising a multi-pronged assessment approach where any form of submission (in person or online) is just one component. Viva Voce, Projects and Internships are some other add-ons to consider.