There has been much discussion of the role of AI writing detectors.

The issue has become more urgent for us with Turnitin announcing the imminent release of an AI detector. At the moment we’ve very little information about the Turnitin offering, other than the detail in the press release, where it claims 97% accuracy (recall) and 1% false positive, so in this post we’ll explore the issue more generally.

There’s one important point that I’ll get out of the way right at the start as this gets asked a lot:

AI detectors cannot prove conclusively that text was written by AI.

We’ll now look at three things:

- How AI detectors work

- What are we trying to detect?

- How are we going to deal with results?

How AI detectors work.

There are three main approaches to AI detection, and we’ll explore these. At the moment we have no information about which approach Turnitin are taking, but we can assume it is the second approach.

Analyzing writing style

The default output of ChatGPT has a very particular writing style. People often comment that it’s bland, overly formal and very polite. Some people claim they can spot it, although evidence seems to suggest otherwise, particularly if we don’t know the writing style of the author well. One of the most well know examples using this approach was the original version of GPTZero, which measured Perplexity and Burstiness of text.

- Perplexity: A document’s perplexity is a measurement of the randomness of the text

- Burstiness: A document’s burstiness is a measurement of the variation in perplexity

Default ChatGPT text has low perplexity and burstiness.

In practice, this approach on its own seems to be both very unreliable and very easy to defeat. It’s very easy to get ChatGPT to modify the way it writes so it no longer follows the standard pattern, and so defeats the detector.

The current version of GPTZero seems to combine this approach with a machine learning classifier, so we’ll look at this approach next.

Machine Learning Classifying

This is the approach used by OpenAI’s AI detector, and also forms part of GPTZero. Essentially, an AI model is trained on a body of text that was written by AI, and a body of text that was written by humans, with the aim of creating a classifier that can predict whether a whole document or individual sentences or portions of text are written by AI or a human.

Open AI certainly found this a challenge to do well – it had a 26% truth positive and 9% false positive rate. This obviously made it essentially useless, but it was only billed as a preview to get feedback on the idea. It might be fair to question exactly how much effort OpenAI put into this, as those figures are very low. Turnitin are citing a 97% truth positive and 1% false positive rate in lab conditions. This feels very high for a real-world situation, especially if people are actively trying to avoid detection.

Of the two classifier-based detectors available to us, both were very easily defeated by making small changes to the way ChatGPT wrote, in the same way we did for the previous example. This isn’t surprising, as by doing this, it no longer looks like the text in the training set.

Watermarking.

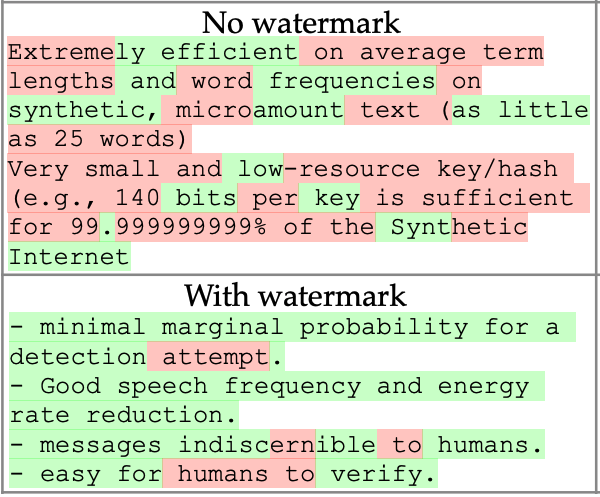

No product currently uses watermarking for text, but it is an active area of research. The approaches we’ve seen all work around the concept that AI text generators predict the next word, but don’t always pick the most obvious word. It is therefore possible to ‘watermark’ the text by choosing the next word in a way only known to the AI text generator.

In a simple example, a model may have a dictionary of 50,000 words. When it receives the prompt, it splits the possible words it could use into two groups based on an algorithm:

- one set of words that it will use (the green set)

- one set of words that it mostly won’t (the red set)

It will then generate the text using words predominantly from the green set. A human wouldn’t be able to spot this if the words were divided carefully. So, text written by the AI will mostly have words from the green set, but text written by a human will have words from both sets. The chance of a human picking text almost exclusively from the green set is very, very low, so any text that mostly contains green words is almost certainly written by AI.

(note: technically it’s tokens rather that words, which can be parts of words or punctuation, but it’s easier to understand if we talk about word!)

We can visualise this easily below:

(image from https://arxiv.org/pdf/2301.10226.pdf)

There are challenges with this approach:

- The watermarking algorithm will have to be created by the company making the AI generator, along with the tools to use it to check the text.

- It’s unknown how easy it would be to defeat, e.g. via paraphrasing tools.

- It feels like a market will just emerge for AI generators with no watermarking.

What are we trying to detect?

It’s now worth reflecting on what actually the aim of the detector is.

The concept of detecting if an entire essay was written by AI probably isn’t controversial – in this case it’s clear a student is attempting to pass off AI work as their own. This is very much an extreme example though.

AI assisted writing is almost certainly going to very much be the norm, with the first generative tool already build into Microsoft Word, and coming soon to Google’s Workplace tools. Microsoft have announced Co-pilot 365 where they say it “writes, edits, summarizes and creates right alongside people as they work”.

Let’s consider the way people are already using AI in writing (these are I things I’m happy to say I do myself):

- Generative AI to create an outline or in other ways help with structure or research. This won’t be flagged by any of the AI detectors.

- Generative AI to correct grammar and improve readability. This is almost certainly going to be flagged.

- Generative AI to help with a first draft of content as a starting point. This is almost certainly going to be flagged.

- Generative AI to create summaries etc. – this is one of the first features built into Microsoft Word, and again is likely to be flagged.

These are just a few examples, and more of these are going to emerge. So, it’s likely that almost everything we write is going to have elements of generative AI assistance, in the same way our writing is algorithmically assisted by spell checkers, and grammar checkers. Grammar checkers indeed already make a lot of use of AI, and generative AI is coming to Grammarly.

So if our assessments are to reflect real-world use of AI in writing, there are lots of grey areas in terms of what we actually want to try to detect.

How are we going to deal with the results?

Ideally, institutions would come up with clear policies and advice, along with processes to implement the policy. At this point, it would be sensible to decide whether AI detection was a useful tool, and then procure a tool that met the needs.

It looks like most institutions won’t be able to do this, as it seems Turnitin are going to make the tool available to everyone and enable it by default. We’d be happy to be corrected on this if it’s wrong.

The immediate question then is what to do with the information it provides. We don’t have an answer for this, but these are some things to consider.

- As it stands today, no detection system will be able to say that work is definitely created by AI. This is not a new situation, in that no software can prove a piece of work is written by an essay mill.

- Those that want to actually cheat, are very likely going to be able to defeat any AI detector.

- A 1% false positive rate means an enormous number of false positives. This is going to create an enormous burden if each of these cases is going to be investigated.

- The detector is likely going to flag uses of AI that most people would consider legitimate, such as when AI has been used to improve wording or correct grammar.

We previously looked at wording of policies on AI use, and this makes this even more important. Any policy banning AI is going to mean all the positives are going to have to be investigated, and this is simply not going to be sustainable.

One possible approach is to use AI detection primarily as a tool to educate students rather than for enforcement. This will need to go hand in hand with guidance on acceptable use and advice how to acknowledge the use of AI. Students could use the output from a detector to reflect on their use of AI and consider whether their use was appropriate and followed the guidelines given.

We are currently working with a group of representatives from institutions to see the best way we can help with the points raised on this post and other related issues.

Find out more by visiting our Artificial Intelligence page to view publications and resources, join us for events and discover what AI has to offer through our range of interactive online demos.

For regular updates from the team sign up to our mailing list.

Get in touch with the team directly at AI@jisc.ac.uk

One reply on “AI writing detectors – concepts and considerations”

The last question is key. I have seen institutions writing unenforceable policies. As pointed out, even 1% FP (which seems absurdly optimistic unless we are to have almost no TP) will be hard to manage.