About the AI team’s programme of pilots

The AI team at Jisc aims to accelerate the adoption of artificial intelligence (AI) in education. One of the ways the team is achieving this is by leading a series of pilots, which allow Jisc members to gain direct experience of implementing AI whilst enabling the AI team to evaluate different AI products. By doing this, the AI team can share the strengths and promises of various AI products so that Jisc members have a more rounded picture of which AI tools work well, and in which circumstances.

About Graide

Manjinder Kainth, co-founder of 6 Bit Education (the team behind Graide) and a former postgraduate teaching assistant at the University of Birmingham, had experienced two problems first-hand: students having to wait long periods for sometimes limited feedback on their assignments, and the challenges educators face in marking large volumes of assignments.

Graide, a digital assessment platform, was developed to address these problems by reducing educator workload around assessment whilst enhancing feedback for students. As well as streamlining assessment – the platform allows work to be set by educators, completed by students, and then marked and returned all in one place – Graide adds value by using AI to recommend marks and constructive feedback on students’ work.

A key principle underpinning Graide is that there are similarities in parts of students’ answers, which means that educators will often repeat themselves when giving feedback on assignments completed by a large cohort of students. By analysing which parts of students’ answers receive particular pieces of feedback, Graide can recommend feedback to a marker. For instance, when students make the same or similar errors in simplifying a particular expression, Graide can pick up that markers tend to give the same piece of feedback, and start recommending that feedback for other students who have made the same mistakes. By assisting the marker in this way, the platform can reduce the time it takes to give feedback, whilst also making it sustainable to give more in-depth feedback.
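
The principle can be illustrated with a deliberately simple sketch. It is not Graide's actual algorithm, which this report does not describe in detail. It assumes that answer steps are matched by exact text after crude normalisation, and that a piece of feedback is only recommended once a marker has given it to the same step a minimum number of times. The class name `FeedbackRecommender`, its methods and the `min_support` parameter are all hypothetical.

```python
from collections import Counter, defaultdict


class FeedbackRecommender:
    """Toy recommender: suggests the feedback most often given to a matching answer step."""

    def __init__(self, min_support=2):
        # min_support (hypothetical): how many times the same feedback must have been
        # given to the same step before it is recommended to the marker.
        self.min_support = min_support
        self.history = defaultdict(Counter)

    @staticmethod
    def normalise(step):
        # Crude normalisation (an assumption): remove whitespace and lowercase,
        # so that "2X + 3" and "2x+3" count as the same step.
        return "".join(step.split()).lower()

    def record(self, step, feedback):
        # Called each time a marker attaches a piece of feedback to an answer step.
        self.history[self.normalise(step)][feedback] += 1

    def recommend(self, step):
        counts = self.history.get(self.normalise(step))
        if not counts:
            return None  # no marker has seen this step yet
        feedback, times_given = counts.most_common(1)[0]
        return feedback if times_given >= self.min_support else None


hint = "Remember the middle term: (a+b)^2 = a^2 + 2ab + b^2."
recommender = FeedbackRecommender(min_support=2)
recommender.record("(x+2)^2 = x^2 + 4", hint)
recommender.record("(x + 2)^2 = x^2+4", hint)

print(recommender.recommend("(X+2)^2 = x^2 + 4"))       # prints the hint
print(recommender.recommend("(x+2)^2 = x^2 + 2x + 4"))  # prints None (unseen step)
```

In this sketch, a step that no marker has seen before produces no recommendation, which mirrors the point that recommendations depend on similarities between students' answers.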

Piloting Graide in Higher Education

The AI team wanted to find out how much demand there was for AI assessment tools in the HE sector, and to understand whether Graide could meet that demand.

The resultant pilot, which was launched in March 2022 and closed in January 2023, was conducted in two stages. In stage one, nine universities were introduced to Graide and its use cases, and were then interviewed by the AI team to discover the extent to which the platform could solve the problems they faced.

For stage two, five universities were selected to test Graide for themselves. The institutions approached this second stage in different ways. One university, for instance, preferred to focus on using a demo Graide account, which allowed them to mark large numbers of pre-loaded maths assignments and thus reflect on how well the AI recommendations eased this process. Another university opted to set up an optional practice assessment for students, using Graide to set, collect, mark and return students’ responses. Meanwhile, another university uploaded student assignments from previous cohorts to the platform, and then used Graide to mark these assignments, thus gaining insight into how useful Graide would be when marking current students’ work. Other institutions used some combination of these approaches.

After the universities had used Graide directly, the Jisc AI team conducted evaluation workshops, which have given the team a rounded picture of Graide’s strengths and promises for the future.

Key insights from the pilot

Stage one

Stage one of the pilot – which measured the need for Graide – indicated that there was strong demand from the sector for technologies that supported both formative and summative assessments.

The consensus from this initial stage was that the product seemed promising. Some universities noted that they had greater need for tools that supported peer assessment or integrity, though generally the application of AI for assisting marking and feedback was deemed potentially valuable.

In terms of the subjects and assessment types for which Graide could be used, participants in stage one were more confident that Graide would be suited to mathematical topics than to topics that required longer, written answers.

Stage two

Staff feedback on Graide’s strengths:

- The interface was engaging, intuitive, and easy to use with minimal support

- The AI was effective at giving feedback for mathematical questions where there were a small number of ways to solve a problem, and where common mistakes were made by users

- Even without the AI recommendations, the system’s design could save educators time as it enables them to drag and drop pieces of feedback they have previously given. This also improved consistency of feedback.

- If educators changed their mind about the feedback that should be given to particular parts of students’ work, changing the feedback once would automatically make changes for all other students who had received that piece of feedback. This also helped to improve consistency of feedback.

- Support from 6 Bit Education (the team behind Graide) was very good
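
The reuse and edit-once behaviour described in the list above can be pictured as a shared bank of comments that students' work refers to by ID, rather than each script holding its own copy of the text. The sketch below is an illustration under that assumption only, not a description of how Graide is built; `FeedbackBank` and its methods are hypothetical names.

```python
class FeedbackBank:
    """Toy model: each comment is stored once and attached to scripts by its ID."""

    def __init__(self):
        self.comments = {}   # comment ID -> comment text
        self.attached = {}   # (student, question part) -> list of comment IDs
        self._next_id = 1

    def add_comment(self, text):
        comment_id = self._next_id
        self._next_id += 1
        self.comments[comment_id] = text
        return comment_id

    def attach(self, student, part, comment_id):
        # Reusing an existing comment only stores a reference to it.
        self.attached.setdefault((student, part), []).append(comment_id)

    def edit_comment(self, comment_id, new_text):
        # One edit changes what every student with this comment will see.
        self.comments[comment_id] = new_text

    def feedback_for(self, student, part):
        ids = self.attached.get((student, part), [])
        return [self.comments[comment_id] for comment_id in ids]


bank = FeedbackBank()
sign_error = bank.add_comment("Sign error when expanding the bracket.")
bank.attach("student_a", "Q1(b)", sign_error)
bank.attach("student_b", "Q1(b)", sign_error)

bank.edit_comment(sign_error, "Sign error: -(x - 3) expands to -x + 3.")
print(bank.feedback_for("student_a", "Q1(b)"))
print(bank.feedback_for("student_b", "Q1(b)"))
```

Because both students' scripts point at the same stored comment, the single edit is reflected for both, which is one way the consistency benefit reported by staff could arise.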

Staff feedback on Graide’s areas for development:

- For mathematical questions that could be approached in many different ways, and where students could make a wide variety of errors, Graide could usually only provide recommendations after a larger number of questions had already been marked (sometimes around 100). This means that Graide could still be useful for marking such questions from very large cohorts (100+), but there were concerns around its usefulness if used with smaller cohorts of students.

- A small number of bugs in the system were identified during the pilot, though 6 Bit Education were able to quickly address these.

- Sometimes educators wanted to give highly individualised feedback to students. However, these pieces of feedback often made it harder for the system to recommend similar feedback to other students with confidence. It was therefore suggested that features could be developed that allow educators to alternate between giving more individualised feedback and more standardised feedback. The team at Graide are currently working to develop such a feature.

- The AI recommendations for Graide only worked when students’ work was digitally typed, as opposed to hand-written. This meant that Graide’s effectiveness could have been reduced for universities/courses where students submitted written work to be marked. Please see the summary (section 5) for Graide’s planned product developments that could address this.

- There was less confidence that Graide could be used for less mathematical subjects, and for assignments that encouraged originality of thought. In the latter case, this can be understood in terms of Graide’s ability to find patterns in the feedback given to parts of students’ work. If there are fewer similarities between students’ work, there are fewer patterns for Graide to learn. Please see the summary (section 5) for Graide’s planned product developments that could address this.

Student feedback

As part of the pilot, the team at Graide conducted a survey with a sample of students who had used the platform directly.

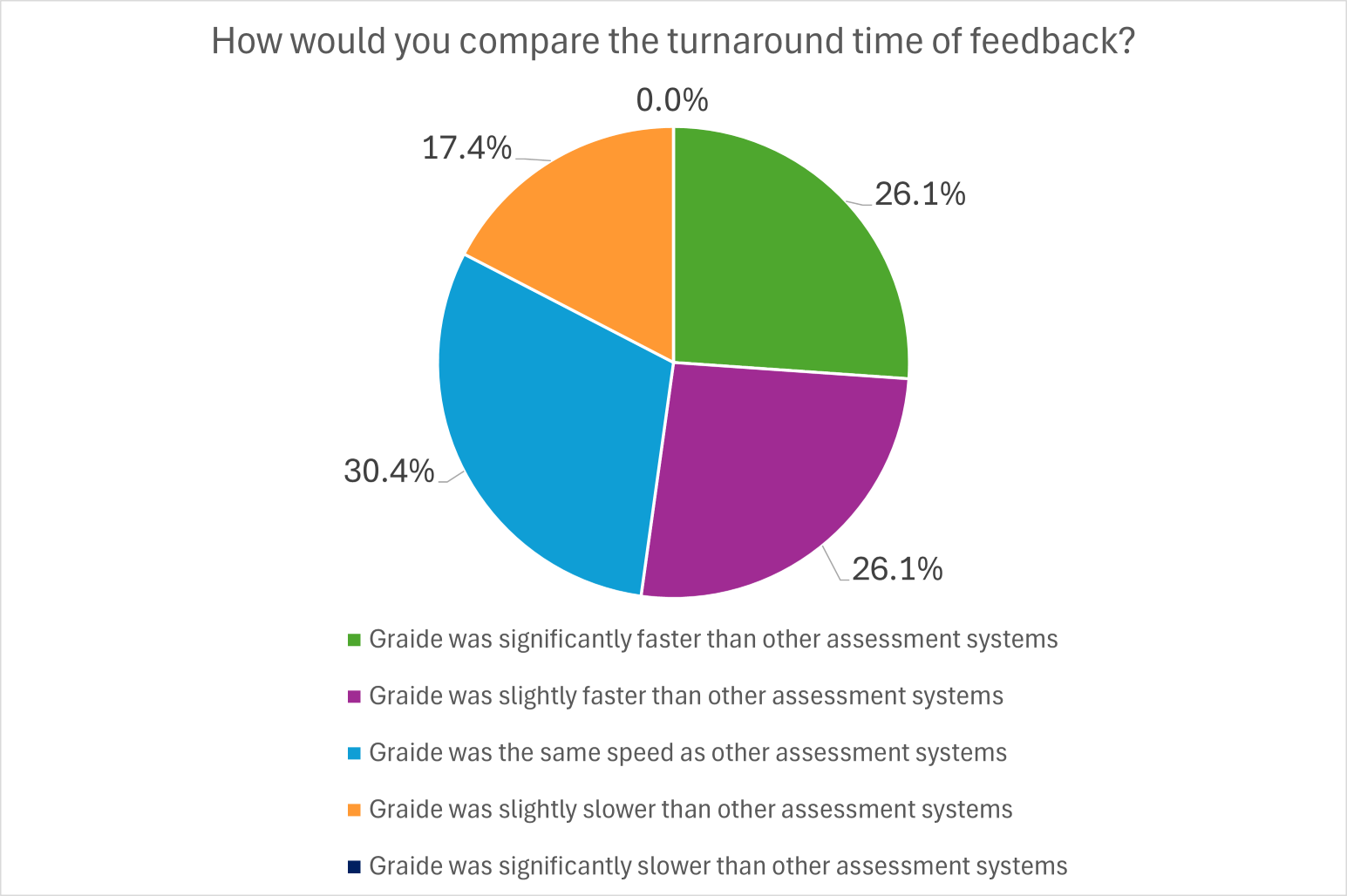

The charts below capture a number of the key insights from these surveys.

Chart 1 – 23 students’ views on turnaround time of feedback.

Chart 1 – 23 students’ views on turnaround time of feedback.

The chart shows that:

- 26.1% of students felt Graide was significantly faster than other assessment systems

- 26.1% of students felt Graide was slightly faster than other assessment systems

- 30.4% of students felt Graide was the same speed as other assessment systems

- 17.4% of students felt Graide was slightly slower than other assessment systems

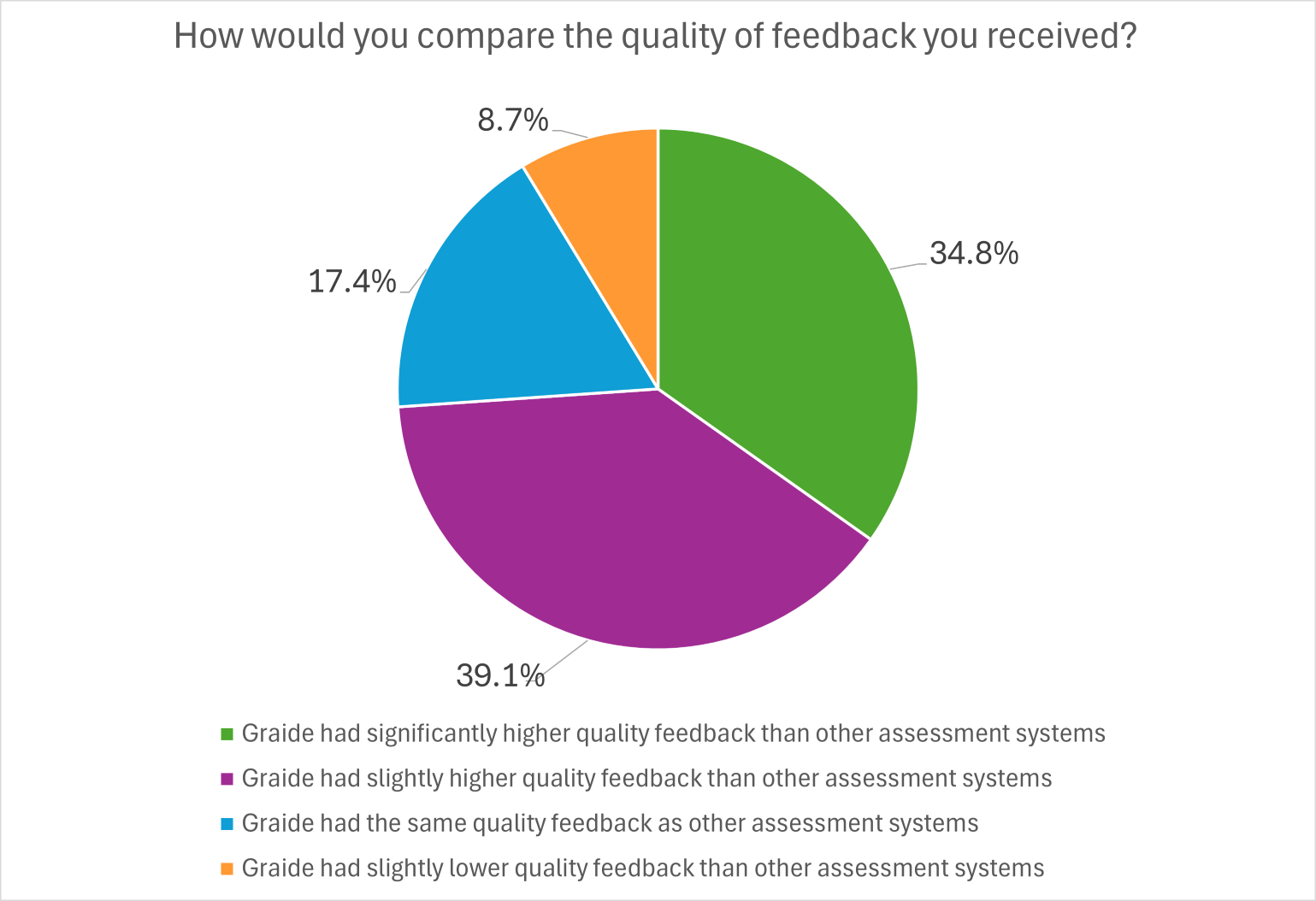

Chart 2 – 23 students’ views on quality of feedback

The chart shows that:

- 34.8% of students felt Graide had significantly higher quality feedback than other assessment systems

- 39.1% of students felt Graide had slightly higher quality feedback than other assessment systems

- 17.4% of students felt Graide had the same quality feedback as other assessment systems

- 8.7% of students felt Graide had slightly lower quality feedback than other assessment systems

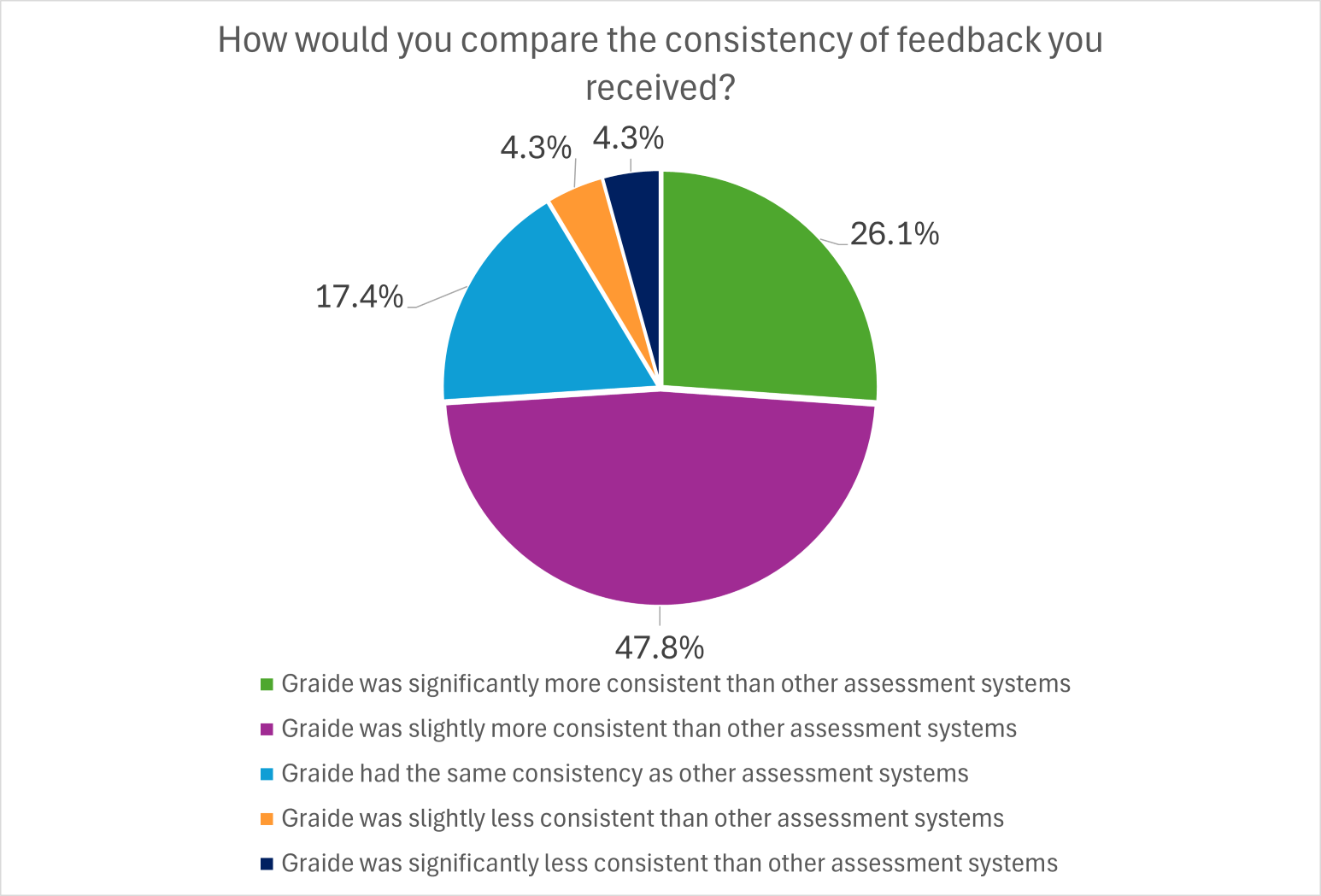

Chart 3 – 23 students’ views on consistency of feedback

The chart shows that:

- 26.1% of students felt that Graide was significantly more consistent than other assessment systems

- 47.8% of students felt that Graide was slightly more consistent than other assessment systems

- 17.4% of students felt that Graide had the same consistency as other assessment systems

- 4.3% of students felt Graide was slightly less consistent than other assessment systems

- 4.3% of students felt Graide was significantly less consistent than other assessment systems

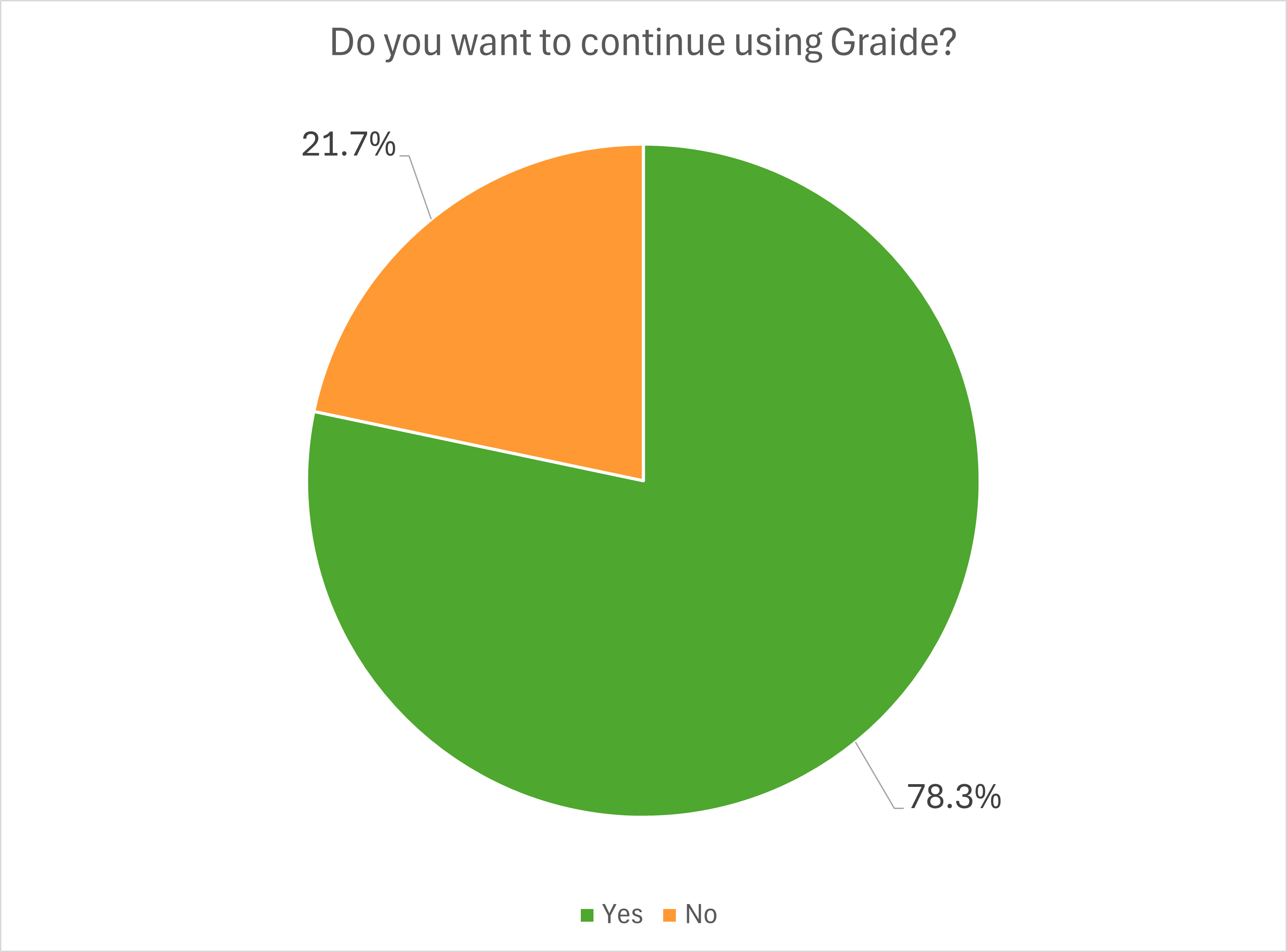

Chart 4 – 23 students’ preferences for continuing with Graide

The chart shows that 78.3% of students wanted to continue using Graide, compared with 21.7% who did not.
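
Because the sample is small, it can help to read the percentages as headcounts. The sketch below converts the figures reported above back into numbers of students, assuming each percentage is a rounded share of the 23 respondents named in the chart captions. The function name `to_count` and the `charts` dictionary are illustrative only.

```python
RESPONDENTS = 23  # sample size stated in the chart captions


def to_count(percent, respondents=RESPONDENTS):
    # Assumes the reported percentage is a rounded share of the respondents.
    return round(percent / 100 * respondents)


# Percentages as reported for each chart, in the order listed in the text.
charts = {
    "Chart 1 (turnaround time)": [26.1, 26.1, 30.4, 17.4],
    "Chart 2 (quality)": [34.8, 39.1, 17.4, 8.7],
    "Chart 3 (consistency)": [26.1, 47.8, 17.4, 4.3, 4.3],
    "Chart 4 (continue using Graide)": [78.3, 21.7],
}

for name, shares in charts.items():
    counts = [to_count(p) for p in shares]
    print(name, counts, "total:", sum(counts))
```

Under that assumption, each chart accounts for all 23 respondents. For example, the 78.3% who wanted to continue using Graide corresponds to 18 students, and each single respondent represents roughly 4.3 percentage points.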

Summary

Overall, the AI team has established that Graide is a promising product that has the potential to solve widespread problems in higher education around assessment.

For cases where the following are true:

- universities set mathematically focused assignments/assessments to large cohorts of students

- a strong degree of similarity is expected between students’ answers

- students’ responses are digitally typed

Graide has demonstrated that it has the capabilities to enhance the assessment process for both students and educators.

The AI team has also learned that new features are in the pipeline, which could expand the contexts in which Graide can be effectively used.

- Natural Language Processing features have been developed that enable Graide to identify where students have expressed similar ideas despite using different words. This could enable Graide’s effectiveness for mathematically expressed responses to be extended to written responses.

- Optical Character Recognition features are being developed, which could enable Graide to treat hand-written responses in the same way that digitally typed responses are currently treated. This would mean that educators could mark uploaded hand-written work with the support of AI-assisted recommendations.

These developments increase confidence in Graide as a strong and effective product.

Next steps

Jisc and the universities that participated in the pilot had a positive experience working with Graide and 6 Bit Education (the organisation that developed Graide).

If you are interested in using Graide or considering using AI to mark mathematical assignments, then we recommend getting in touch with Graide to explore more: contact@graide.co.uk

If you have any general questions or suggestions around the uses of AI for marking assignments, please get in touch with the AI team at: ai@jisc.ac.uk
