On Tuesday 28th November we held our first AI in FE discussion clinic, with just over 50 members from around the UK joining us online to discuss issues around AI safety in FE.

AI Safety in FE

The first half of our session was led by Senior AI Specialist, Paddy Shepperd, who gave a thorough overview of current discussions around AI safety and key considerations for responsible AI use.

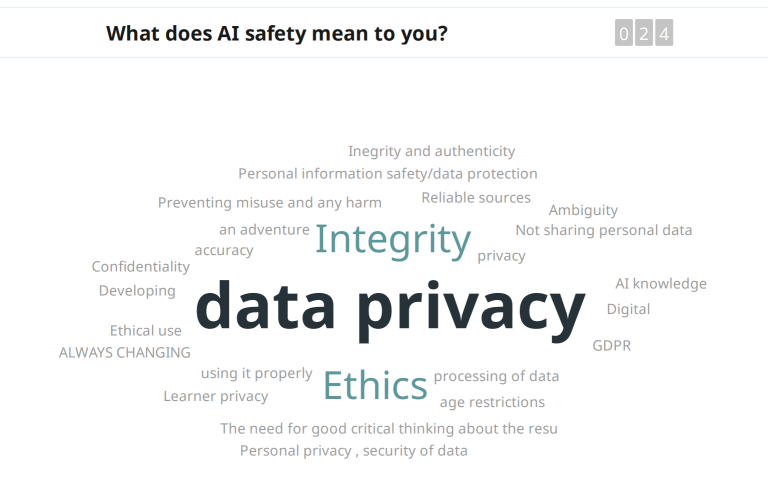

Beginning with the benefits and limitations of AI tools for education, we looked at what AI safety means to the community. We then explored AI safety from a student’s perspective, covering terms of use and risks around data privacy and security. The session then moved on to the potential for generative AI to be used to create inappropriate content, including convincing scam emails and deepfakes of real people. We also took this opportunity to review where members of the community felt they sat within our maturity model. Encouragingly, most members hadn’t yet encountered any AI safety issues in their practice. However, there was an acknowledgement that as the uptake of AI tools increases, issues are more likely to emerge, so we need to be aware and prepared.

The key aim was to review strategies that prioritise student safety and responsible AI use, such as evaluating AI-generated content for accuracy, fact-checking AI output and monitoring the terms of service of AI tools.

Discussing the key questions

In the second half of the session, we split into smaller breakout groups to discuss unanswered questions around AI safety and the resources and knowledge we might need to address them.

Three key areas of discussion emerged:

Assessment & AI detection

Assessment was a primary area of concern and sparked a discussion about changing assessment methods in the age of generative AI. Suggestions included incorporating more oral assessment formats, allowing students to demonstrate their understanding through discussion, and placing less emphasis on written work.

Issues around AI detection were also raised. There was a general acknowledgement that detection tools are not reliable enough to accurately identify AI-generated content in students’ work, and that false positives from these tools could themselves pose a safety issue.

Further guidance on AI from qualification bodies was identified as a key resource needed to address these issues.

Student use

From the discussion around assessment, conversation turned to considering what constitutes acceptable student use of AI tools.

There was agreement that students should declare any use of AI, but also concern that it is not yet clear how much AI use is acceptable, or in what circumstances. This makes it hard to advise students on what is acceptable, and as a result they may not feel comfortable declaring any AI use at all.

Staff knowledge of AI

Throughout the session, members also emphasised the continued importance of upskilling staff on AI. It was agreed that greater awareness of the capabilities and limitations of AI tools is essential to cultivating safer use of AI. However, members noted that engaging all staff in this upskilling is a considerable challenge, as staff may be reluctant to engage with AI for a wide variety of reasons.

Overall, it’s clear that many questions around AI safety remain open and there is more work to be done to answer them. These discussions will continue to shape our work in the Jisc AI Team, and we expect AI safety to be a recurring topic in upcoming discussion clinics.

The next AI in FE session will be held on 30th January 2024. Please register here to take part: https://www.jisc.ac.uk/training/artificial-intelligence-in-further-education-discussion-clinic

We are keen for members to shape future AI in FE sessions. If you would like to raise a particular theme or topic for discussion, deliver a lightning talk or lead a discussion activity at a future meetup, please get in touch with us at ai@jisc.ac.uk.

Find out more by visiting our Artificial Intelligence page to view publications and resources, join us for events and discover what AI has to offer through our range of interactive online demos.

For regular updates from the team, sign up to our mailing list.

Get in touch with the team directly at ai@jisc.ac.uk.