On Tuesday 27th February, we held our third community meeting. A great group joined us for an insightful discussion on how to provide learners with guidance on AI.

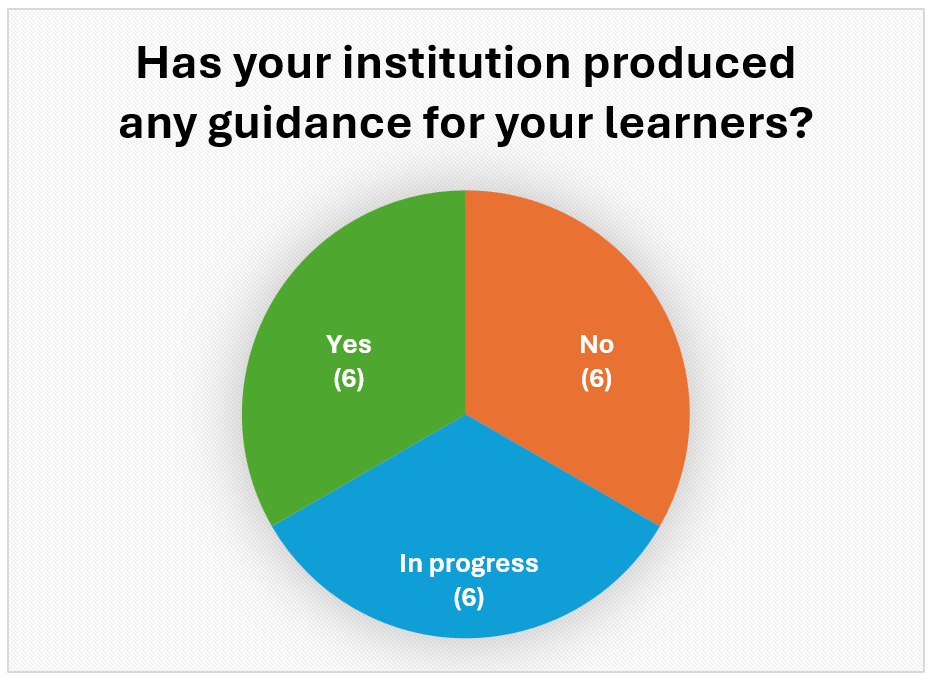

The first half of our session was led by AI Technologist James Hodgkinson, who began with a poll asking attendees whether their institutions had already produced guidance for their learners.

Interestingly, responses were split equally between ‘Yes’, ‘No’ and ‘In progress’, showing that members were at different stages of implementing guidance for learners.

James then gave a detailed summary of our Learner Guidance for FE blog, which covers key themes including how to use AI in college, the limitations of AI, guidelines on referencing, and AI safety and data privacy concerns. This set the stage for the breakout rooms that followed, where participants split into smaller groups to discuss the guidance and, in particular, the following questions:

- Would this approach work for your learners?

- What else would you like to see included?

- Is there anything that needs more detail?

- Is there anything you think should be removed, or that you disagree with?

Feedback on our Learner Guidance

We then reconvened to share highlights from the group discussions. Overall, the guidance was received very positively, and a couple of members mentioned that they would use it to reflect on their existing learner guidance drafts. They felt it captured the benefits of AI well while also addressing the important areas where learners need to be cautious.

We received some excellent suggestions for improvement, which is exactly what we were looking for. Members suggested that an infographic would make the information more accessible and digestible for learners. Ideally, institutions could display this on classroom walls, but members also noted that a mobile-friendly version, easily accessible via social media, would be needed. The groups agreed that exploring alternative formats is necessary to engage learners, for example using platforms like TikTok or creating animated content to deliver bite-sized, impactful guidance.

Other suggestions included turning the guidance into tutorial programmes and integrating it into slide presentations for broader accessibility and engagement. Members also proposed adding a comprehensive FAQ section that addresses common questions and concerns. We had not previously explored this idea; it sparked significant interest, and we now plan to include it as we develop the guidance further.

Referencing

The importance of effective referencing was emphasised, with members asking for clear instructions on how to reference AI technologies and other sources accurately in academic work.

Some members recommended using tools such as Cite Them Right alongside the guidance to promote proper citation practices.

Everyone agreed that this is a particularly complex topic, acknowledging the challenge of aligning with the guidance of assessment and awarding bodies. In particular, JCQ’s updated guidelines were highlighted as an important reference to consider.

Continued discussion

The wider discussion on learner guidance proved particularly interesting. Members noted that as AI becomes increasingly integrated into everyday tools, learners may find it hard to identify what constitutes AI. It was also mentioned that students may be aware of certain tools that staff are not. Furthermore, concerns were raised about how difficult it is to keep both staff and students up to date with the rapid pace of technological change.

Finally, members made an interesting point about avoiding specific tool names, such as Copilot or Gemini, in guidelines. Given how quickly AI is changing, this reduces the need for frequent updates. Instead, they proposed referring to such tools as ‘GenAI tools’, which keeps the guidelines flexible.

Thank you to everyone who attended and took part in the session. We hope to see you all again at our next meetup on March 26th, 12.30-1.30pm, where we will be discussing assessment.

Register here for March’s meetup.

We are keen for members to shape future AI in FE sessions. If you would like to raise a particular theme or topic for discussion, deliver a lightning talk or lead a discussion activity at a future meetup, please email us at ai@jisc.ac.uk.

Sign up for Digifest here – we’d love to see you there!

Find out more by visiting our Artificial Intelligence page to view publications and resources, join us for events and discover what AI has to offer through our range of interactive online demos.

For regular updates from the team sign up to our mailing list.

Get in touch with the team directly at AI@jisc.ac.uk