I spoke at a couple of events last year where I reflected on the trends, successes, and failures of the previous 12 months. As part of this, I briefly looked at some of the main LLMs and how they had developed over that time. I focused on the models most of us are likely to use (those from OpenAI, Meta, Anthropic, and Google) and noted three main trends:

a) A continued drive to create the most powerful model

b) A trend towards also providing more efficient models, either as an alternative or, as in the case of ChatGPT, their main model

c) The beginning of ‘reasoning models’ that could work step by step through problems

We’re only three months into 2025, and there have already been a lot of developments since I delivered those sessions. The announcements are coming thick and fast, and it’s quite hard to keep up.

As an aside, I’ve used the term LLMs quite loosely here. The tools we use (ChatGPT, Copilot, Gemini etc.) have the LLM at the core, but add features or combine AI techniques. This has been a trend for a while, and it’s increasingly difficult—at least from a user perspective—to separate what’s part of a base model, what’s fine-tuning, what’s clever prompting, and what’s just extra features added to the UI.

The key trends I’m seeing this year are:

a) The introduction of ‘Deep Research’ tools

b) Huge changes in image generation and video

c) A continued battle to be the ‘biggest and best’ model

As well as this, we are of course seeing new entries into the race, particularly from China with DeepSeek.

I’m not going to go into any of these in depth in this post. I’ll just cover each briefly and point to what to read if you want to find out more.

The introduction of ‘Deep Research’ tools

Deep Research, like ‘Deep Thinking’ etc., is a bit of an unfortunate name given how it overstates the ability, but we’re stuck with it for now. This is a feature in a few of the main tools, including ChatGPT, Gemini, and Perplexity, where you ask the tool to research a topic. It won’t answer straight away. Instead, it will spend some time coming up with a research strategy, then searching the web, refining, etc., and creating a report of the findings, often a few minutes later.
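To make that plan, search, refine, report loop a bit more concrete, here is a minimal sketch in Python. It is purely illustrative and is not how ChatGPT, Gemini, or Perplexity actually implement the feature: the tiny in-memory `TOY_WEB`, the keyword rule in `refine`, and every function name are assumptions made up for this example. A real tool would call a search engine and an LLM at each step.

```python
from dataclasses import dataclass, field

# Hypothetical stand-in for the web: a real tool would query a search engine.
TOY_WEB = {
    "ai image text": ["Image generators often use diffusion models."],
    "diffusion models text": [
        "Diffusion models denoise pixels and have no notion of letters."
    ],
}


@dataclass
class ResearchState:
    question: str
    queries: list = field(default_factory=list)
    findings: list = field(default_factory=list)


def toy_search(query):
    """Return canned snippets for a query (assumption: no network calls)."""
    return TOY_WEB.get(query, [])


def refine(findings):
    """Pick a follow-up query from the findings so far.

    This is a crude keyword rule standing in for an LLM's judgement.
    """
    if any("diffusion" in f for f in findings):
        return "diffusion models text"
    return None


def deep_research(question, first_query, max_steps=5):
    """Search, refine the query, repeat, then write up a short report."""
    state = ResearchState(question)
    query = first_query
    for _ in range(max_steps):
        if query is None or query in state.queries:
            break  # nothing new to look up
        state.queries.append(query)
        state.findings.extend(toy_search(query))
        query = refine(state.findings)
    report = f"Report on: {question}\n" + "\n".join(
        f"- {f}" for f in state.findings
    )
    return state, report


state, report = deep_research("Why do AI images get text wrong?", "ai image text")
print(report)
```

The point of the sketch is the shape of the loop: each search result feeds into the choice of the next search, which is why these tools take minutes rather than seconds.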

If you want to try this, I’d recommend Perplexity, as you can try 3 searches a day for free, and it’s nice and transparent. Here’s an example, related to my next trend – understanding why AI image generators get the text wrong so often.

First, in Perplexity, select ‘Deep Research’ in the text box. I’ve asked a vague question about image generation text and AI.

It’s transparent in its approach, so we can see it starts with a vague search on some image sites.

This seems to give it enough information to understand it’s related to diffusion models, so it then looks at some much more relevant sites for info on this.

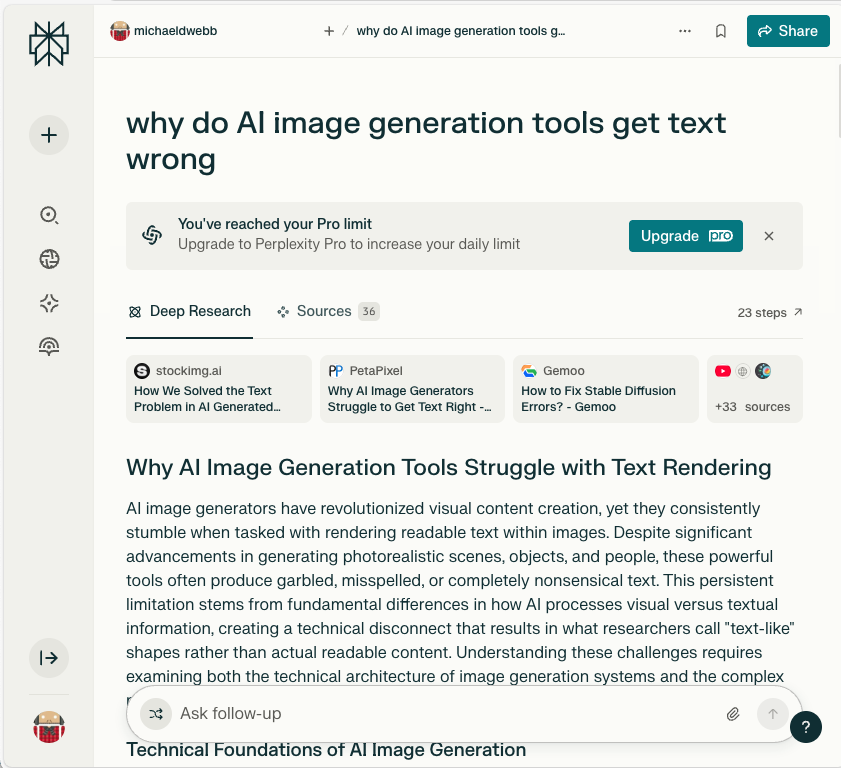

It carries on in this vein, in this instance for 23 steps, before giving me a final report.

How useful is this? For understanding a topic it’s great. Obviously it doesn’t always select the best sources, and nobody would really call it deep research, but it’s a useful tool and well worth exploring.

Huge changes in image generation and video

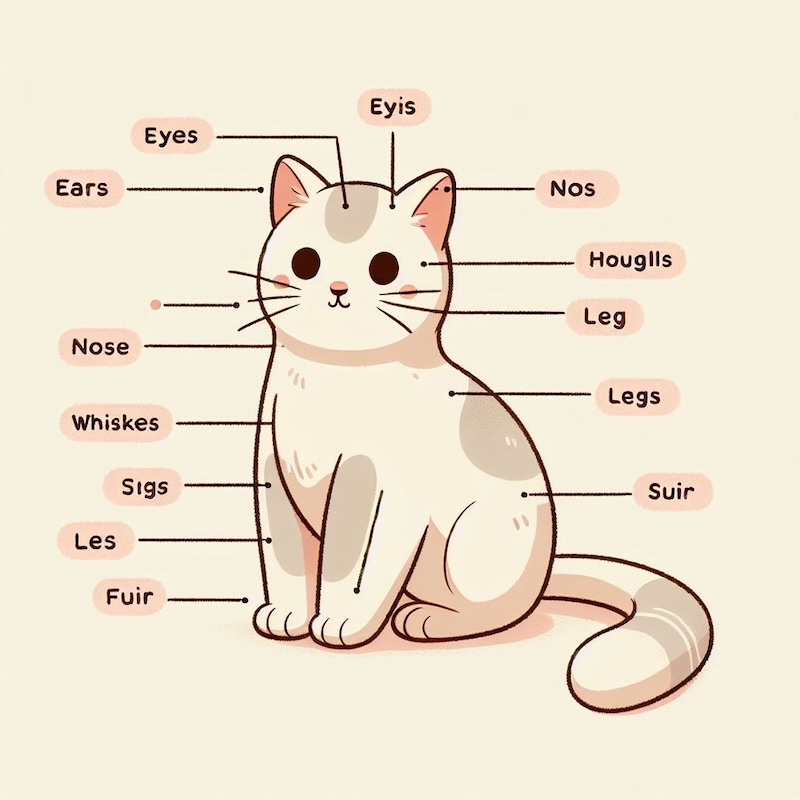

AI’s inability to generate accurate text in images has been the basis of many a meme or joke. Here’s an image I generated with Copilot this morning, asking it to create a labelled picture of a cat.

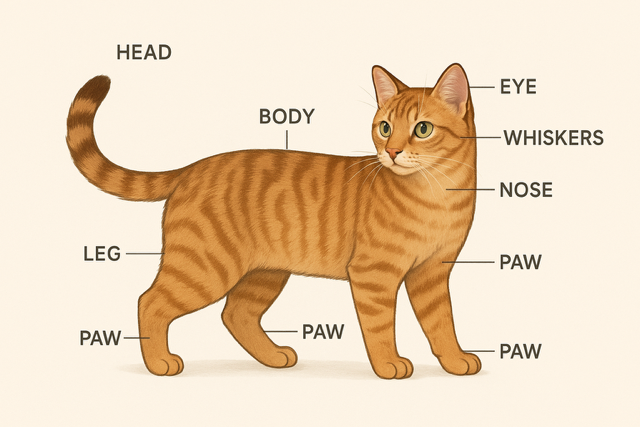

The text is typical ‘AI text’. If I do the same thing with ChatGPT (Paid) I now get this:

Note the crystal-clear text. Also note the slight failure to correctly identify the bits of the cat! This is part of GPT-4o’s new image generation model. There are quite a few other features as part of this, noted in the article I’ve linked to.

What’s happening here? There’s been a huge change behind the scenes, and OpenAI are now using an autoregressive model rather than a diffusion model.

What does this mean? Until now, most AI image generators have worked by starting with a completely scrambled image — like static on a TV screen — and gradually making it clearer step by step. This is known as a diffusion process.
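As a rough illustration of that "static to picture" idea, here is a toy sketch in Python. It is not a real diffusion model: a real one uses a trained neural network to predict what noise to remove, whereas this example cheats by already knowing the target image. The function names and the four-pixel "image" are assumptions for illustration only.

```python
import random


def toy_denoise(target, steps=10, seed=0):
    """Start from random noise and nudge every pixel toward `target`.

    Assumption: we use the known target directly, where a real diffusion
    model would use a trained network to estimate the noise to remove.
    """
    rng = random.Random(seed)
    image = [rng.random() for _ in target]  # pure "TV static"
    history = [image]
    for step in range(steps):
        remaining = steps - step
        # Every pixel is updated at once, removing part of the remaining error.
        image = [p + (t - p) / remaining for p, t in zip(image, target)]
        history.append(image)
    return history


def error(image, target):
    """Total absolute difference between an image and the target."""
    return sum(abs(p - t) for p, t in zip(image, target))


target = [0.0, 1.0, 1.0, 0.0]  # a tiny four-pixel "image"
for step, image in enumerate(toy_denoise(target)):
    print(step, round(error(image, target), 3))
```

The key thing to notice is that the whole image is refined together at every step, rather than being built up one part at a time.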

Autoregressive models work differently. Instead of starting with a blurry mess, they build the image piece by piece, a bit like putting together a jigsaw puzzle — or the way language models write one word at a time.
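Here is a toy sketch of that piece-by-piece idea in Python. It is only an illustration and not OpenAI's method: `next_patch` is a hypothetical stand-in for a trained model, and here it simply alternates between 0 and 1 based on the last piece it produced.

```python
def next_patch(previous):
    """Hypothetical stand-in for a trained model.

    It chooses the next patch from the patches generated so far.
    Here it just alternates 0 and 1.
    """
    if not previous:
        return 0
    return 1 - previous[-1]


def generate_autoregressive(width, height):
    """Build an image one patch at a time, left to right, top to bottom."""
    patches = []
    for _ in range(width * height):
        # Each new patch depends on everything generated before it.
        patches.append(next_patch(patches))
    # Fold the flat sequence of patches back into rows.
    return [patches[r * width:(r + 1) * width] for r in range(height)]


for row in generate_autoregressive(3, 2):
    print(row)
```

Because each piece is chosen with the earlier pieces in view, the same mechanism that lets a language model keep a sentence consistent can, in principle, keep the letters in an image consistent too.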

It’s not just text that’s improved; the process gives a lot more control over the whole image. We’re already seeing some amazing examples on social media.

A continued battle to be the ‘biggest and best’ model

There’s no let-up in the battle to be the biggest and best model. Social media tends to focus on speculating about what the next big OpenAI model (GPT-5) might be like, but actually a lot of incremental changes happen all the time. OpenAI have released GPT-4.5, Anthropic have released Claude 3.7 Sonnet and Google have released Gemini 2.5.

Each set of release notes shows a range of data and stats as to why their model is the best, but as a user the improvements perhaps aren’t that obvious. Taken over time, though, they are. GPT-3.5, not even three years old and the basis of the original ChatGPT, seems ridiculously primitive now.

What else?

I’ve picked three trends to note here, but there’s a lot more happening.

Agentic AI continues to progress, allowing AI to do tasks for us. This includes OpenAI’s Operator, only available in the US, and their more basic scheduled tasks, available to all paid users.

Interactive features continue to develop. Claude has long had a ‘canvas’-style feature (Artifacts) where it would run code and you’d see the results visually. Gemini now has this too, along with new collaboration features. Ask Gemini to code a webpage and it will render the code and let you see the results.

And Claude can now finally search the web. This is probably part of a trend where the features of all these tools are starting to converge.

There’s an awful lot more happening around audio and video, but I’ll look at these another time. In the meantime, if you just have time to try one new thing, give Perplexity’s deep research features a go.

Find out more by visiting our Artificial Intelligence page to view publications and resources, join us for events and discover what AI has to offer through our range of interactive online demos.

Join our AI in Education communities to stay up to date and engage with other members.

Get in touch with the team directly at AI@jisc.ac.uk