Navigating the impacts of generative AI on assessment remains a complex and evolving challenge. One recommendation in discussions of these issues has been to encourage students to document how they create their work, for example by saving their conversation logs with generative AI tools or enabling tracked changes in their documents, so that they can demonstrate their use, or lack thereof, of generative AI tools.

In response, Grammarly have developed a new Authorship feature, which aims to track the source of text as it is being written. It automatically generates an Authorship report that the writer can use as evidence of how they built their document.

Grammarly have published a thorough walkthrough on how to access the beta release of this feature, which you can currently try out in Google Docs. We have explored the beta and want to discuss how it differs from AI detection, and how it might best be used given current issues around generative AI use and assessment.

Authorship – not a form of AI detection

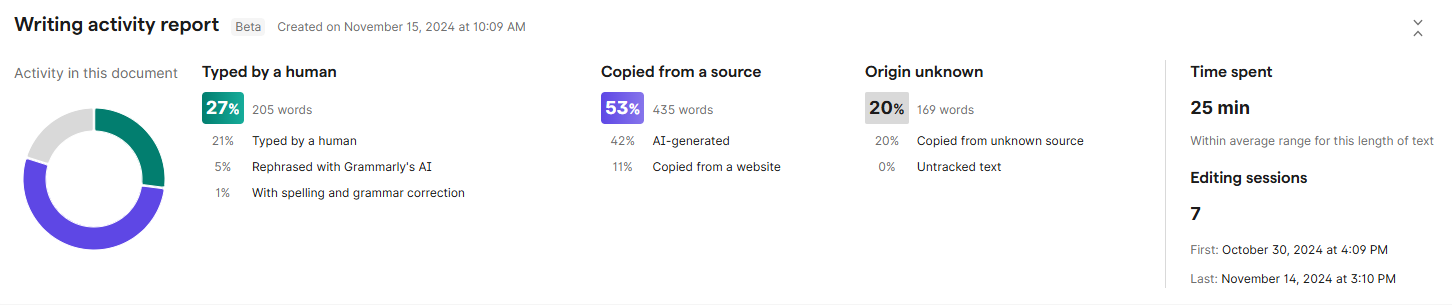

Grammarly’s Authorship report provides a ‘writing activity’ section, which shows the time spent on the document, the number of editing sessions, and a percentage breakdown of where the text came from. The categories include text typed directly into the document, amendments made using Grammarly’s own features, and text copied from recognised and unknown sources. The text of the document appears below this, highlighted according to its source category. Finally, the ‘Authoring replay’ lets the viewer watch the document being built in full, with options to skip through at a faster pace.

A key point to emphasise is that Grammarly’s Authorship feature does not use any ‘traditional’ AI detection technology. It does not analyse the content, wording or structure of the text to try to identify whether it was written by AI. Authorship is concerned with where the text came from, not what it says.

How are sources identified?

When users enable Authorship, they can consent to Grammarly having access to their clipboard. This allows the tool to identify which websites the user is copying and pasting text from. If they don’t consent to this, they can still run Authorship reports, but all pasted text will be categorised as coming from an unknown source.

For some tools, including ChatGPT, the linked source also takes the writer back to their conversation log with the tool.

Because Authorship categorises text based on where it was copied from, it is quite easy to manipulate the recognised source and change the report. For instance, instead of copying and pasting text directly from a ChatGPT tab, we can copy it from ChatGPT into a Word document and then copy it from there into our Google Doc. This changes the recognised source from ‘AI-generated’ to ‘unknown source’.

If we really want to make it look like our own writing, we can simply type the text into the Google Doc by hand, and it will be recognised as ‘Typed by a human’.

However, this is where the other features of the report come into play, together giving a holistic view of how the document was created. If we generated text with ChatGPT and then went to the effort of typing it back into the Google Doc by hand, the Authorship report would still show the total time, the number of editing sessions and the replay, which would reveal the unusual way the document was constructed.

How might Authorship be used?

Authorship itself isn’t an AI detection tool; however, we expect many people may be wondering whether it presents a viable alternative to AI detection software.

First and foremost, we don’t see AI detection as a constructive way to manage student use of AI, and we have set out the key considerations around AI detection before. Because Authorship doesn’t use this technology, it avoids some key ethical issues, such as false positives and potential bias against non-native English writers.

That said, Authorship doesn’t closely parallel the current role of AI detectors, either in how it works or in how it can be implemented. The Authorship report is enabled, accessed and shared by the individual user, so it is in the student’s control. This is unlike AI detection software, which is typically run on students’ work after submission, in a process they have no visibility of.

Grammarly have indicated that there may be further functionality in Authorship for education licence holders in future. However, they have emphasised that they are positioning Authorship primarily as a ‘trust and transparency’ tool, and that, for now, giving students control of the feature is intentional.

Authorship could, however, work well as a way of supporting students to acknowledge and demonstrate their use of generative AI. This could prove particularly helpful for those redesigning assessments to incorporate generative AI tools. Authorship could help students both to reflect on their use of generative AI and to show their assessor easily how they have used different tools.

From a student’s perspective, there may be significant appeal in having the Authorship report available to demonstrate their writing process if they were challenged on the integrity of their work. Feedback from our student forums showed that some students have felt unable to challenge decisions made about generative AI and academic integrity. We are also aware that this has been a particularly strong issue for disabled and neurodivergent students, who have had their access to some supportive AI tools, including Grammarly itself, called into question because of those tools’ generative AI features.

This raises some key questions for the use of tools like Authorship, particularly if they were to become more embedded in assessment processes. For example, are we comfortable with students feeling they must use, and potentially pay for, these kinds of tools to prove the integrity of their work?

Try it out

For now, you can try the Grammarly Authorship beta for free by using the Grammarly browser extension with a Google Doc. In a pre-release webinar, Grammarly indicated that free users may have more limited access to the Authorship feature in future, so now is a good time to test it yourself and see how it works in practice.

We will be keen to see how the feature works in its full release, and across the desktop and mobile versions of Grammarly too. In the meantime, we are interested in how this feature is being received and what the AI in education community sees as its potential. We welcome comments below this blog and discussion at our AI in education community sessions.

Find out more by visiting our Artificial Intelligence page to view publications and resources, join us for events and discover what AI has to offer through our range of interactive online demos.

For regular updates from the team sign up to our mailing list.

Get in touch with the team directly at AI@jisc.ac.uk