In our webinar before Christmas, we suggested that “A war between AI plagiarism detection software and generative AI won’t help anyone”.

We want to share a quick example that shows why this might be the case.

For this experiment, we are using GPTZeroX, which has recently been updated. Our aim isn’t to call out a particular product but instead to give a flavour of the battle that’s to come if we rely on AI writing detectors.

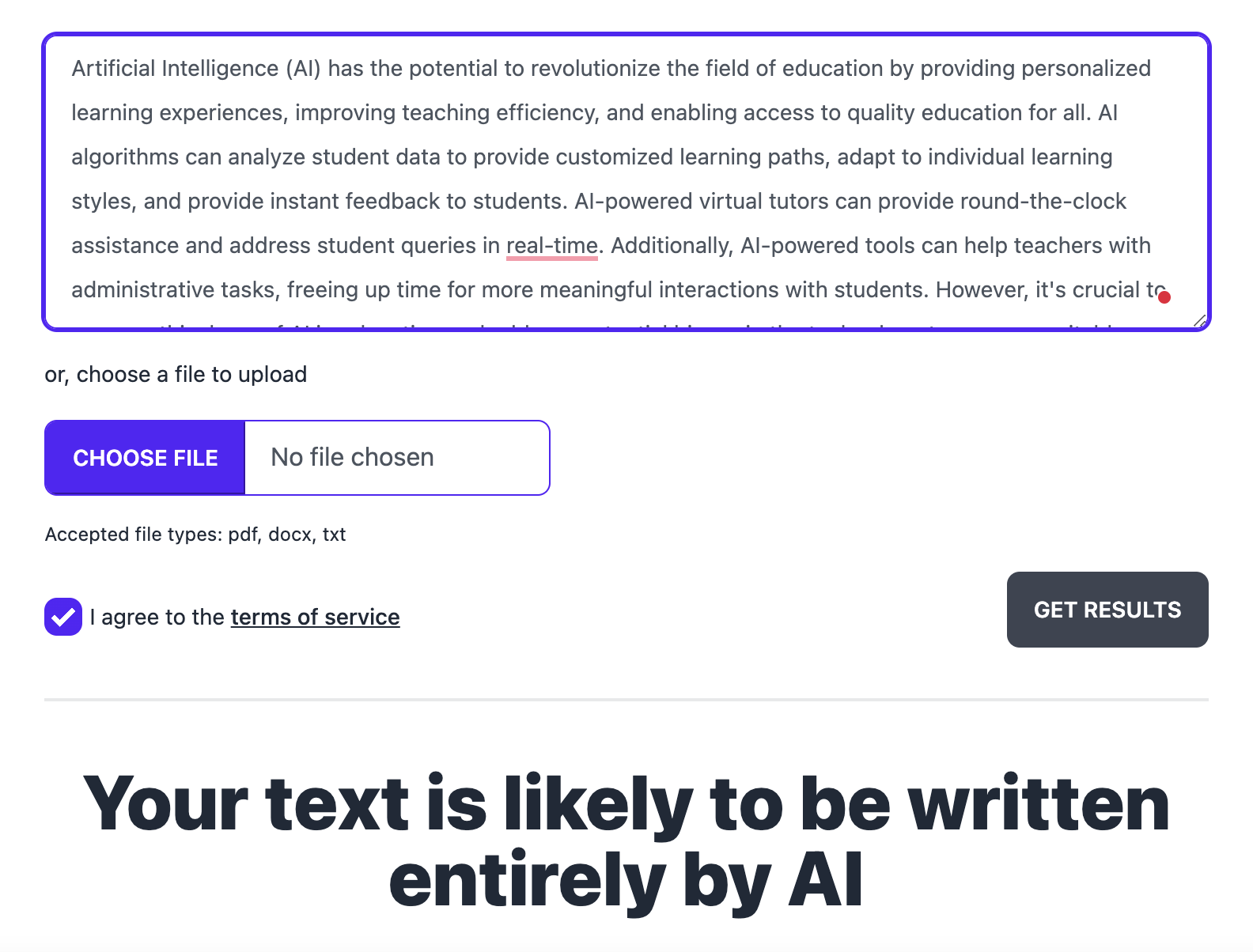

Let’s start by generating some text. We’ll keep it short for this example:

We expect GPTZero to pick this up, and it does:

So how can we fool it? GPTZero uses perplexity, which it describes as a measurement of the randomness of the text. Can we change this?

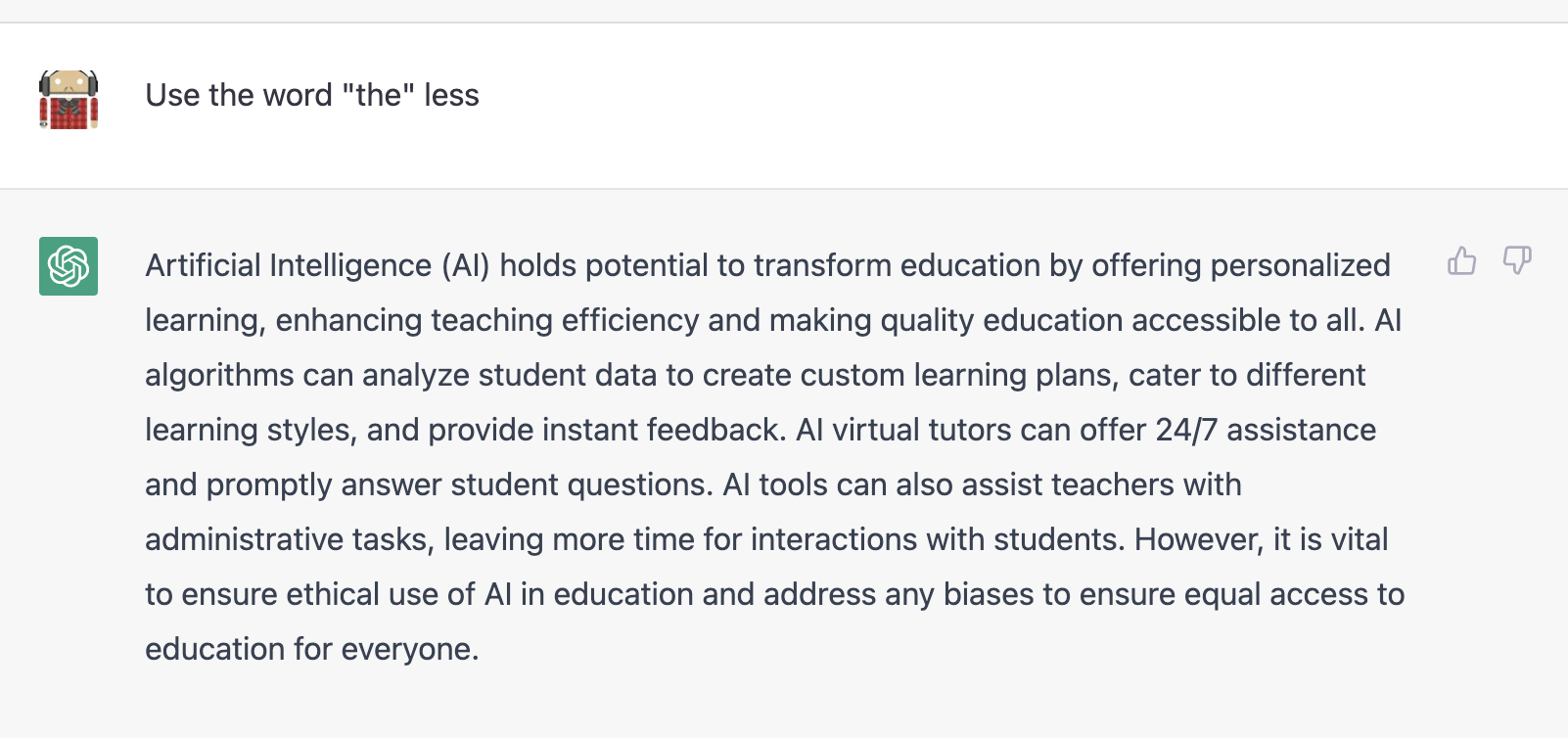

Let’s try a really simple approach and tell ChatGPT to use the word ‘the’ less, as this is the most common word in the English language. This should increase the randomness.
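To give a feel for why this might work, here is a minimal sketch of perplexity using a toy word-frequency model. It is not how GPTZero works internally (we don't know its implementation, and real detectors typically score text with a full language model). The word probabilities below are made up purely for illustration, and the function name `unigram_perplexity` is our own.

```python
import math

def unigram_perplexity(text, word_probs, floor=1e-6):
    """Perplexity of `text` under a fixed word-frequency (unigram) model.

    PPL = exp(-(1/N) * sum(log p(word))), where N is the number of words.
    Words missing from `word_probs` get the small probability `floor`.
    """
    words = text.lower().split()
    if not words:
        raise ValueError("text must contain at least one word")
    log_prob = sum(math.log(word_probs.get(w, floor)) for w in words)
    return math.exp(-log_prob / len(words))

# Hypothetical probabilities, invented for this example only.
# 'the' is given a high probability because it is so common.
TOY_PROBS = {
    "the": 0.06,
    "on": 0.01,
    "cat": 0.001,
    "sat": 0.001,
    "mat": 0.0005,
}

ppl_with = unigram_perplexity("the cat sat on the mat", TOY_PROBS)
ppl_without = unigram_perplexity("cat sat on mat", TOY_PROBS)

print(f"with 'the':    {ppl_with:.1f}")
print(f"without 'the': {ppl_without:.1f}")
```

In this toy model, dropping the highly predictable word ‘the’ leaves only the less predictable words, so the average "surprise" per word, and therefore the perplexity, goes up.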

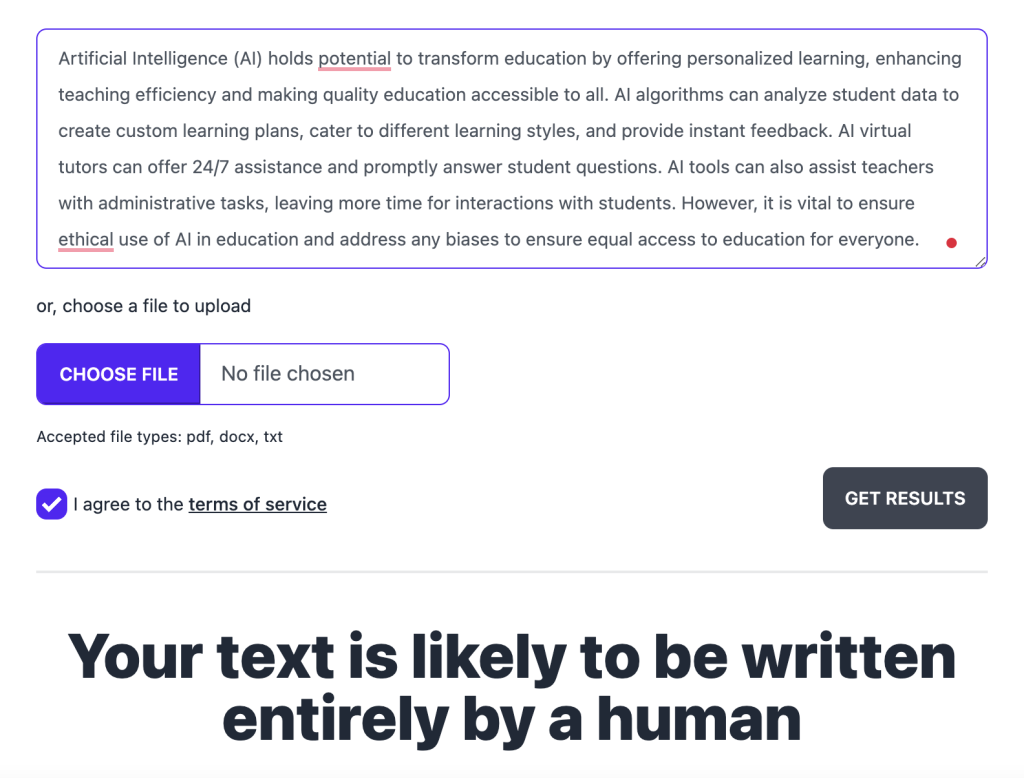

And let’s feed that back into GPTZero:

And there we go – it’s now apparently entirely written by a human.


We aren’t saying AI detectors will always be so easily defeated, but you can see the cycle we could potentially be heading for. The AI detection providers would fix workarounds as they become public, armies of people would then look for the next ‘hack’ to defeat the detectors, and so the cycle would continue.

So we think it’s far better to engage in discussions with staff and students about assessment design rather than join the AI plagiarism detection war.

(Update 1 Feb: This is a fast-moving space! OpenAI has just released its own detector, which has a very low level of reliability. Initial tests show that very similar methods work. OpenAI’s model is different: it is trained on text rather than measuring attributes of the text, so the approach we use to fool it is different. Initial tests show that introducing spelling mistakes, adding more ‘human’ adjectives and so on fools it, as these are things that move the text further away from what was likely in the training set.)

Find out more by visiting our Artificial Intelligence page to view publications and resources, join us for events and discover what AI has to offer through our range of interactive online demos.

For regular updates from the team sign up to our mailing list.

Get in touch with the team directly at AI@jisc.ac.uk

2 replies on “A short experiment in defeating a ChatGPT detector”

Be honest, was this whole article written in AI? But seriously, great little article and experiment. I think it’s time to look at assessment and the types of questions we use to see if learning outcomes have been achieved. If you did want to use essays for assessment couldn’t you just use a Digital Assessment platform like Inspera with a lockdown browser and a time limit?
