Following on from our Learner Guidance for FE, we’ve had lots of interest in the best way to provide advice to staff across FE institutions. In this blog post, we’ve created an example of staff advice for comment and feedback.

- Would this approach work for your institutions?

- What else would you like to see included?

- Is there anything you think should be removed, or that you disagree with?

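Table of Contents

1. Artificial Intelligence

1.1 Generative AI

1.2 Other forms of AI

2. Using Generative AI in Educational Settings

2.1 Ethical Use and Best Practices

3. Use Cases for AI Systems

4. Limitations of generative AI

5. Understanding AI System Risks

6. AI Systems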

1. Artificial Intelligence

1.1 Generative AI

Generative AI systems can generate new outputs such as text, images, video, audio, or code based on provided instructions. Inputs to these systems range from user prompts, such as questions or commands, to training data, which informs the AI’s learning process. The AI’s responses or creations are the outputs.

Examples include:

- Chatbots like ChatGPT and Google Gemini, which use large language models to produce textual outputs. Some of these tools can also read and create images.

- Everyday tools with integrated AI functionalities like Office 365 and Grammarly, including systems like Microsoft Copilot.

- Content creation platforms such as Gamma and TeacherMatic.

1.2 Other forms of AI

It’s important to recognise that not all AI systems are generative. Some AI technologies focus on tasks like grouping or classifying data and analysing it to make decisions. Some examples of traditional AI tools are voice assistants like Siri or Alexa, recommendation engines on Netflix or Amazon, or Google’s search algorithm. These systems do not generate new outputs and, therefore, are categorised differently from generative AI systems.

2. Using Generative AI in Educational Settings

In educational settings, AI can automate administrative tasks, support information gathering, and offer personalised learning experiences. It is important we use these tools responsibly and safely, but also feel confident to take full advantage of the services they offer.

2.1 Ethical Use and Best Practices

When using generative AI tools in your institution, it’s crucial to maintain a balance between technological assistance and human interaction, especially in areas like mentorship, where understanding personal motivations and guiding learners through challenges are distinctly human attributes. Below we have included some best practices:

- Complementing Human Interaction: AI tools should be used to enhance, not replace, the human elements in education, such as empathy, understanding, and personal mentorship. They should support teachers and learners by reducing workload and providing personalised learning experiences without diminishing the value of direct human engagement.

- Pedagogical Alignment: AI tools need to be aligned with pedagogical goals and learning outcomes. This means ensuring that the technology supports the curriculum in meaningful ways, enhancing learning objectives rather than dictating them.

- Inclusivity and Accessibility: Content generated by these AI tools should be designed and implemented with inclusivity in mind, ensuring that all learners, regardless of their backgrounds or abilities, can benefit from the technology. This involves creating accessible content and interfaces that accommodate diverse learners.

- Professional Development: Staff should engage in ongoing training and support in using AI technologies. This ensures that staff are not only comfortable using these tools but also understand the ethical implications and pedagogical strategies associated with their use.

3. Use Cases for AI Systems

There are numerous ways in which AI systems can support staff in their work. Below are some specific use cases where AI systems prove beneficial for both educational and support staff.

| Use Case | Description | Things to Consider |
| --- | --- | --- |
| Course Content Creation | Staff can input details like course topics, learning goals, and content length into AI tools to generate custom teaching materials such as syllabi and lesson plans. | – Accuracy of AI in interpreting details<br>– Safeguards for content relevance and quality |
| Generation of Assessment Items | Staff can produce a range of questions aligned with learning targets and content areas, ensuring varied complexity and adherence to grammatical standards. | – Alignment with learning objectives and difficulty levels<br>– Ensuring question uniqueness and fairness |
| Making learning resources accessible to all | Staff can use AI to adjust learning material to make it suitable for all their learners, for example supporting learners with dyslexia or ASD. | – Ensure you review the adjustments to make sure they are suitable for your learners. |
| Summarisation of Academic Texts | Staff can use AI tools to summarise complex texts, enhancing comprehension and saving time. | – Capturing essential points without losing critical details<br>– Handling of various text complexities and lengths |
| Translation of Educational Content | Staff can use generative AI for translating teaching materials, expanding access for learners from diverse linguistic backgrounds and promoting inclusivity. | – Accuracy and cultural sensitivity of translations<br>– Mechanisms for reviewing and correcting errors |
| Creation of Visual Aids and Presentations | Staff can convert textual information into visual presentations and materials to aid in explaining complex concepts. | – Effectiveness of text-to-visual translation<br>– Quality control measures for accuracy and appropriateness of visuals |
| Streamlining Administrative Tasks | Staff can automate routine administrative tasks such as scheduling, email responses, and document management to focus on higher-priority tasks. | – Effectiveness of AI tools in reducing workload<br>– Balance of automation and human oversight |
| Planning Educational Trips and Itineraries | Staff can use AI to create detailed itineraries for educational trips, considering travel time, destinations, and educational objectives. | – Tailoring to educational goals and logistics<br>– Safety, accessibility, and inclusivity considerations |
| Writing and Managing Social Media Posts | Staff can use generative AI to produce content for social media platforms, aiding in communication and outreach efforts. | – Maintaining consistency of the institution’s voice and message<br>– Oversight to ensure appropriateness and accuracy of content |

4. Limitations of generative AI

AI-generated outputs can sometimes reflect biases or inaccuracies due to their vast and varied training data. It is essential to verify the reliability and accuracy of these outputs. This involves cross-checking information, considering the context of the generated output, and assessing the potential for bias.

Bias and Ethical Concerns: Generative AI systems are trained on large datasets that may contain biased information, leading to outputs that reflect these biases. Staff must critically assess AI-generated content for potential biases and ensure it aligns with ethical standards and the diverse backgrounds of learners.

Accuracy and Reliability: While AI can generate content rapidly, the accuracy of this content can vary. Inaccuracies may arise from outdated information, incomplete data analysis, or misinterpretation of the input prompts. Educators should verify the information generated by AI against credible sources before use and encourage learners to adopt a critical approach to AI-generated content.

Misapplication of AI Tools: A subtle yet significant risk of using generative AI in educational settings is applying AI tools to tasks they are not optimised or intended for. For instance, using generative AI systems like ChatGPT to detect AI-generated content, or relying on them for complex calculations, can lead to inefficiencies and inaccuracies. These systems, while advanced, have their limitations and are not designed to critically evaluate their outputs or perform highly specialised tasks outside their training parameters.

5. Understanding AI System Risks

When using generative AI, it’s essential to understand the risks to ensure safe usage and prevent potential issues.

Intellectual Property Concerns

Generative AI models are often trained on large datasets of existing content, which may include copyrighted material. If an AI model incorporates substantial elements from copyrighted works into its output, there’s a risk of copyright infringement. Even if the AI generates content that is not an exact replica of any single copyrighted work, it could still be considered infringing if it draws heavily from copyrighted sources.

AI tool providers are coming under increased scrutiny for the use of copyright-protected works as training material. Publishers are concerned about their materials being part of the data sets used to train systems and are currently seeking to address this issue through new licensing models. The law is adapting to address these concerns and protect rights holders, but no concrete solutions have emerged.

Best Practices for Staff:

Use only approved AI Systems: Rely on institutionally approved AI tools that include safeguards against generating copyrighted material.

Deep Fakes and Misinformation

Deep fakes are highly realistic and convincing digital manipulations of audio and video created using AI. They can make it appear that individuals are saying or doing things they never did. Deep fake technology introduces substantial privacy and misinformation challenges by enabling the creation of convincingly realistic fake audio and visual content.

Best Practices for Staff: To combat the challenges posed by deep fakes and misinformation, educational institutions should:

- Promote Awareness and Education: Initiate comprehensive digital literacy programmes aimed at educating learners and staff on the nature and risks of deep fake technology, fostering the ability to critically assess and respond to potential misinformation.

- Implement Verification Protocols: Establish protocols for verifying the authenticity of digital content related to the institution, particularly when such content could impact the privacy and reputation of the community members.

Confidentiality and Data Integrity

The integrity of institutional data and the confidentiality of sensitive information are at risk when AI systems process or store such data. Continuous updates to AI models with user inputs can inadvertently incorporate confidential information into their training datasets.

Best Practices for Staff

Strictly Control AI Data Inputs: Carefully manage and monitor the information fed into AI systems, avoiding the input of confidential or sensitive data.
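For institutions that want a technical backstop to this practice, the idea of checking text before it reaches an AI system can be sketched in Python as below. This is a minimal illustration, not a recommended tool: the `redact` function, the `PATTERNS` table, and the `LN` learner ID format are all hypothetical, and simple patterns like these will not catch names, addresses, or free-text descriptions of individuals. Human review of what is entered remains essential.

```python
import re

# Hypothetical patterns, for illustration only. A real list would need to be
# agreed with your institution's data protection lead.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\b0\d{4} ?\d{6}\b"),   # e.g. 01234 567890
    "LEARNER_ID": re.compile(r"\bLN\d{6}\b"),    # assumed ID format
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches of each pattern with a placeholder.

    Returns the redacted text and a count of replacements per category,
    so staff can see what was removed before anything is sent.
    """
    counts: dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label} REDACTED]", text)
        if n:
            counts[label] = n
    return text, counts


if __name__ == "__main__":
    prompt = "Email jo.bloggs@example.ac.uk or call 01234 567890 about LN123456."
    clean, found = redact(prompt)
    print(clean)
    print(found)
```

A check like this could run before a prompt is pasted into an approved tool. A non-empty count is a signal to stop and review the text, not a guarantee that the remaining text is safe to share.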

6. AI Systems

Approved/Recommended AI Systems:

Note: This should be a list defined by your institution

| Approved AI Systems | |
| --- | --- |
| Microsoft Copilot | |
| Cont.. | |

Unapproved AI Systems

| Unapproved AI Systems | |
| --- | --- |
| Read.ai | |
| Cont.. | |

Setting Up Your Accounts:

When configuring your AI tools, prioritise privacy and control:

- Disable automatic data sharing.

- Ensure manual activation of AI functionalities.

ChatGPT

Below are the steps you need to take to turn off data sharing on ChatGPT:

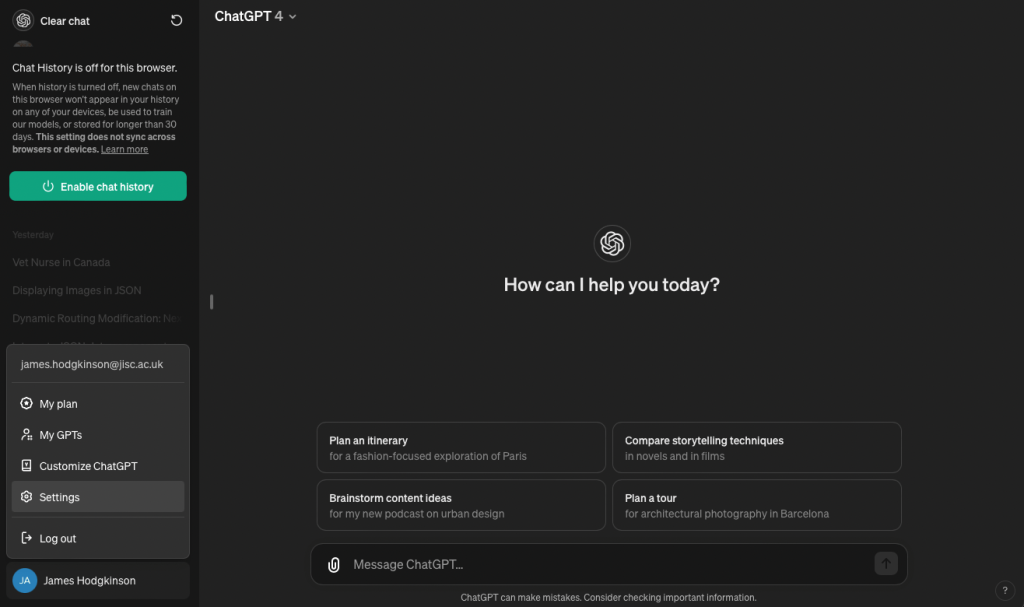

First, click your username in the bottom left and click settings.

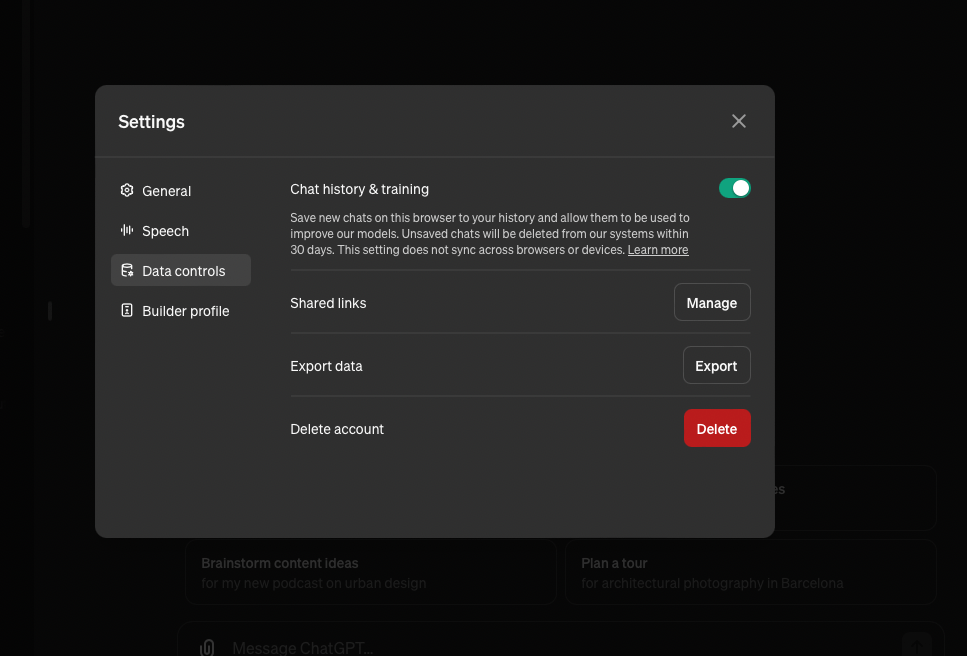

Next, click the data controls tab on the left-hand side.

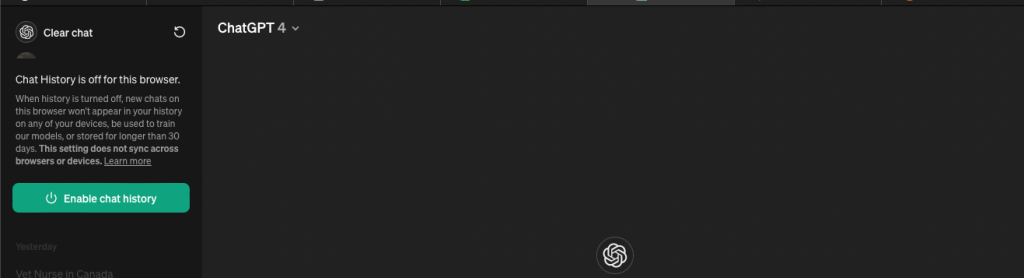

Click the chat history and training button so it is turned off. Your page should now look like the screenshot below.

Google Gemini

If you want to use Gemini Apps without saving your conversations to your Google Account, you can turn off your Gemini Apps Activity. Even when Gemini Apps Activity is off, your conversations will be saved with your account for up to 72 hours. This lets Google provide the service and process any feedback. This activity won’t appear in your Gemini Apps Activity.

Please don’t enter confidential information in your conversations, or any data you wouldn’t want a reviewer to see or Google to use to improve its products, services, and machine-learning technologies.

Microsoft Copilot

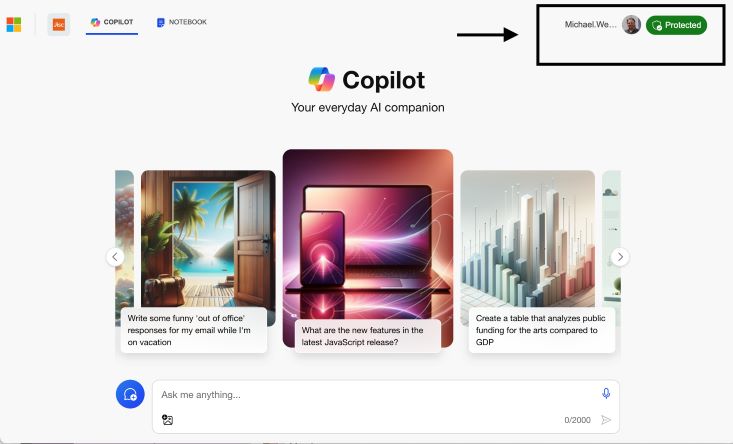

As we have the enterprise version of Copilot, your data is secure. You can be sure this is the case if you see this in the top right of Copilot. (Note: this depends on your college’s Microsoft licence.)

5 replies on “Staff Guidance for FE”

I would have expected some guidance on detecting AI misuse and advice/best practice on how to prevent misuse. Also information related to age restrictions and parental consent would be useful. What about IP related to students owning their work and giving consent before it is uploaded to any AI tools by teachers ie for marking, should this be included?

Thanks for your feedback Nicola. We are currently in the process of collating all feedback and will share an updated version of the guidance once this has been done.

Thanks, this is great. From conversations with teaching staff at our college, I think staff would also like guidance on whether they should be crediting/ declaring resources produced using AI tools. Also, more guidance on using AI tools within a class setting would be good. For example, having an awareness of age restrictions. Thanks!

Thanks for your feedback Jacinta. We are currently collating all feedback and will share an updated version of the guidance once this has been done.

Hi James, our admin team are planning to trial AI to streamline minute taking and meeting summaries. We plan to use CoPilot. Many of the MS Teams meetings are sensitive and confidential within our organisation and even down to a more granular stakeholder level. Can the admin team be confident that the contents of the meeting that are fed into the AI cannot be harnessed by other employees who were not invited to the meeting?