In November, as part of a week of engagement for Jisc’s accessibility and assistive technology communities, we held a discussion session to consider current issues around AI use in assessment and the impacts on assistive technology users.

Our aim was to bring the AI, accessibility and assistive technology communities together to hear about current experiences and potential solutions.

Setting the scene

We began the session with a short presentation on some of the current challenges, specifically how approaches to defining acceptable use of AI in assessment may be excluding assistive technology users.

AI has long played a key role in popular assistive technologies such as captioning software, screen readers, and grammar and spell checkers. Meanwhile, newly developed generative AI tools and features are offering new ways to assist disabled and neurodivergent students. Students and learners who have felt supported in their education by AI tools have shared their perspectives in student forums, in guest blogs and in the news.

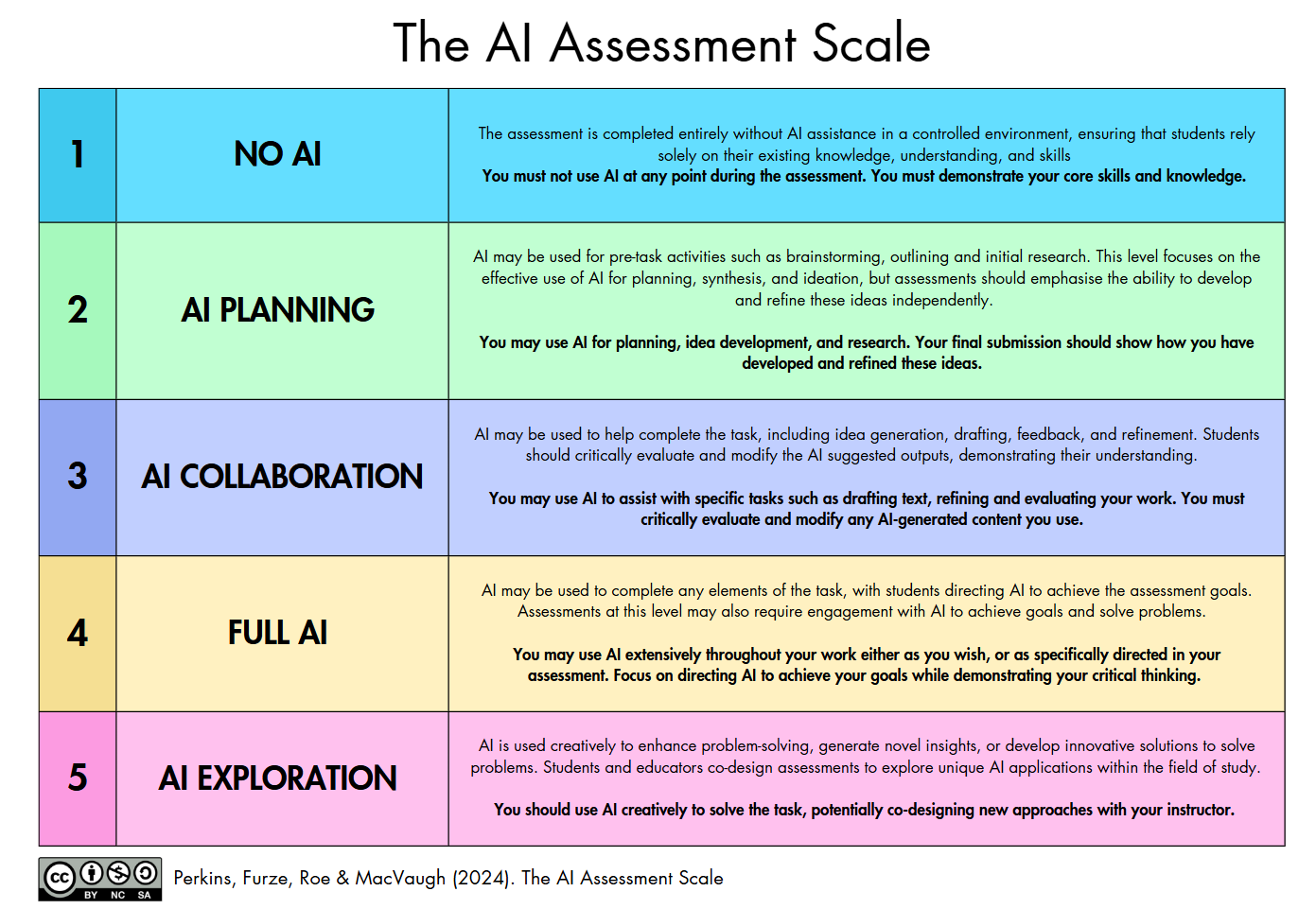

At the same time, there are ongoing efforts to ensure that generative AI in particular does not undermine academic integrity. Determining what counts as ‘acceptable use’ of AI tools in assessment continues to be a hugely complex challenge for the sector, and we’re seeing many different approaches to help staff decide what level of AI use to allow in their assessments. Assessment scales, such as the AI in Assessment (AIAS) Scale pictured below, aim to give staff a way to determine the appropriate level and to communicate clearly to students what is allowed.

Examining different scales and frameworks, we see that they frequently begin at a ‘No AI’ level. There is concern that this overlooks AI’s role in assistive technology and potentially excludes AI-enabled tools that students have long been able to use, even in assessment.

Grammarly is emerging as a key example of this issue. It is widely used by students, including many who access it through the Disabled Students’ Allowance, and it has a long history of use in education. However, since it added generative AI features, it has become harder to determine whether students should be able to continue to use it during assessments.

Addressing the ‘No AI’ approach and thinking widely

For our discussion, attendees considered these issues from two perspectives:

How might we ensure no one is worse off than they were before?

Firstly, the risk that disabled and neurodivergent students may now have less access to the assistive tools they used before generative AI became available, even if they aren’t using the generative features specifically.

How can we ensure new opportunities aren’t missed?

Secondly, going further to ensure that the opportunities offered by AI tools, including generative ones, aren’t missed because students are unable to explore and discover them.

Key areas of discussion

Our discussion was wide-ranging and prompted many insightful comments. Below are some of the key areas it covered.

AI vs Generative AI

It was recognised that, for most academic integrity concerns, it is generative AI tools and features that are seen as the most problematic. However, AI scales and policies tend to refer to AI in general. Some attendees felt this has contributed to confusion around AI policies and what is and isn’t allowed. For students using assistive technologies, which often combine various types of AI tools, making this distinction clear can be particularly important. It was noted that where there is uncertainty, staff and students alike may err on the side of not using these tools at all for fear of breaching policy.

Legal risk

There is particular concern that, for students who have access to tools as part of a reasonable adjustment, removing that access not only risks disadvantaging them but also presents a potential legal risk to the institution under the Equality Act.

Tools such as Glean, Scholarcy and Grammarly are provided according to students’ needs through the Disabled Students’ Allowance (DSA). Attendees noted that it has been confusing for students to be provided with these tools and then restricted in how they can use them.

Providing an exemption

We discussed providing an exemption or amendment to AI policies for students using AI in, or as, an assistive tool. An exemption or amendment to the ‘No AI’ level might, for instance, specify that students using AI-enabled assistive technology should keep the same access to those tools that they had before the policy. For example, students could continue using Grammarly’s grammar and spelling correction but would turn off its newer generative AI features. This might require students to declare which tools they have used and how they have used them for assistive purposes.

It was noted, though, that managing exemptions could be complex. There was concern that any process requiring students to disclose their use of an assistive tool could identify them and compromise the anonymity of the assessment. There was also concern that requiring students to declare, and even evidence, their need for a tool could place an undue burden on them that did not exist before.

Providing alternatives to assistive tools that are now considered to have too much capability, for instance those with generative features, has also been suggested. However, it was noted that it is difficult to find alternatives that offer the same experience, and switching could disadvantage students who are familiar with a particular tool.

AI literacy for staff and students

Improving AI literacy and understanding among both staff and students repeatedly came up as key to navigating these challenges. There was a concern that the ‘No AI’ option presented in scales and policies could seem the lowest-risk choice for staff who haven’t developed a strong understanding of AI tools and are wary of making the wrong choice for their assignment.

This specifically included raising awareness of the role of AI in, and as, assistive technology. Attendees felt this could deepen staff understanding and, more generally, improve their confidence in making informed choices about AI use in assessment. It was suggested that an institutional statement on AI use for assistive purposes, accompanying AI policies and guidance, could also help provide clarity and raise awareness among staff.

Importantly, it was also noted that we can’t assume students will know what to ask for, or how best to use AI tools once they have access to them. Training and support on using AI tools, aimed specifically at disabled and neurodivergent students, was suggested to ensure they have time both to explore the technology and to understand its limitations.

Assessment design

Comments and discussion also centred on assessment design itself, and how we might build assessments that both account for the impact of generative AI and are as accessible as possible. There has been concern that efforts to mitigate generative AI use in assessments can prompt a shift towards traditionally less accessible forms of assessment, such as in-person and handwritten examinations.

It was noted that, for qualifications where summative assessment formats are determined by examining bodies, it is difficult to make these changes. For formative assessment, however, there may be more opportunities, although it was acknowledged that these changes will take time and will require staff to develop both AI literacy and accessibility knowledge.

Next steps

Thank you to everyone who took part in the session. The points raised were insightful and showed the breadth and complexity of these ongoing issues. We are keen to continue these discussions and, importantly, to hear from those in the sector who are navigating these challenges.

We encourage anyone interested in this area to join our communities for discussion and updates:

Accessibility and Assistive Technology Communities

From Jisc’s AI team we also have a series of AI literacy resources:

Generative AI in Practice Hub (select ‘Learner Resources’)

AI literacy training for staff recorded sessions

AI literacy training for staff upcoming live sessions (Nov-Dec ‘24)

And resources specifically on AI in assessment:

Embracing Generative AI in Assessments: A Guided Approach

Assessment Menu: Designing assessment in an AI-enabled world

Find out more by visiting our Artificial Intelligence page to view publications and resources, join us for events and discover what AI has to offer through our range of interactive online demos.

For regular updates from the team sign up to our mailing list.

Get in touch with the team directly at AI@jisc.ac.uk