The release of ChatGPT coincided with rising anxiety about academic integrity, brought about by the move to online assessment post-COVID. This, at least, was the experience of my colleagues and me in the Digital Assessment Advisory at UCL, and it seemed to reflect concerns elsewhere.

The result was, and remains, a demand for increased assessment security measures such as in-person invigilated exams. This response can be understood not so much as resistance to change but as a reflection of the fact that the rapid pace of AI developments requires an agile and flexible response, something that HE regulatory systems often cannot accommodate. Add to that the workload involved in changing assessment, and the negotiations required both internally and, in many cases, externally with Professional, Statutory and Regulatory Bodies, and it is not surprising that familiarity wins out.

Of course, there is a lot at stake; we have a responsibility to protect the integrity of our degrees and ensure that students achieve awards fairly and on their own merit. The question is whether the fall-back position of traditional, security-driven assessment can be any more than a stopgap. As Rose Luckin, an expert in both AI and education, proposes, GenAI is a game-changing technology for assessment and for education in general.

“[GenAI is] much more than a tool for cheating, and retrograde steps (like going back to stressful in-person exams assessing things that AI can often do for us) not only fail to prepare students to engage with the technology, they miss opportunities to focus on what they really need.”

So, what do students really need from assessment in an AI-enabled world? In the view of Luckin (and others), AI will change how and what we teach and learn. The capabilities students will need to develop and be able to demonstrate include critical thinking, contextual understanding, emotional intelligence and metacognition.

Acknowledging that we can’t ignore GenAI, ban it or outrun it with detection tools (ethically, at least), UCL adopted from the outset the only viable option: to integrate and adapt to AI in an informed and responsible way. However, taking this position meant supporting staff to navigate this ever-changing terrain.

An AI and Education experts’ group was set up in January, focusing on four strands: Policy and Ethics, Academic Skills, Assessment Design and Opportunities. One output of the group is a newly released Generative AI hub for UCL staff, which brings together the latest information, resources and guidance on using Artificial Intelligence in education. It includes a three-tiered categorisation of AI use in assessment, which staff can use to set expectations for students.

As part of the assessment design strand of this group, my contribution was a set of resources called ‘Designing assessment in an AI enabled world’ divided into two parts:

- Small-scale adaptations and enhancements to current assessment practice that are viable within the existing regulatory framework.

- Planning for larger changes, with an assessment menu of suggestions that either integrate AI or make it difficult for AI to generate a response.

The inspiration for the Assessment menu was Lydia Arnold’s ‘Top Trumps’, a wonderful resource consisting of 50 suggestions for diversifying assessment, using a star rating system based on Ashford-Rowe, Herrington and Brown’s characteristics of authentic assessment. Lydia generously made this free to edit and adapt for non-commercial purposes.

Although there was a lot of guidance around using AI in education, it was surprisingly hard to find concrete examples of assessment design for an AI-enabled world. I thought Top Trumps would be an ideal starting point and set about reconfiguring the cards to either integrate AI or make it more difficult to generate a response using AI. I also drew on material such as the excellent crowdsourced ‘Creative ideas to use AI in education’ resource, Ryan Watkins’ ‘Update your Course Syllabus’ post and Lydia Arnold’s recent post on integrating AI into assessment practices, and added a few ideas of my own. A list of contributors can be found here: Assessment Menu Sources. The examples were adapted accordingly, framed as student activities and broken down into steps.

I wanted to include meaningful indices for UCL staff to help them identify relevant approaches:

- A revised version of the star rating system used in Top Trumps.

- Types of assessment, corresponding to those in UCL’s Assessment Framework for taught programmes.

- Types of learning that each activity assesses and develops, referencing Bloom’s taxonomy but also the capabilities that experts envisage becoming increasingly important.

- Appropriate formats.

- Where appropriate, whether the activity is suitable for specific disciplinary areas.

My colleague Lene-Marie Kjems helped with visual design and accessibility. We were about to publish our first version when my manager, Marieke Guy, mentioned that a collaborative assessment group at Jisc was also planning an AI version of Lydia Arnold’s Top Trumps. There is no such thing as a new idea, as Mark Twain famously said!

The Jisc group (Sue Attewell (Jisc), Pam Birtill (University of Leeds), Eddie Cowling (University of York), Cathy Minett-Smith (UWE), Stephen Webb (University of Portsmouth), Michael Webb (Jisc) and Marieke (UCL)) invited me to work with them on producing an interactive, searchable version of the assessment menu for their website. Having forty options to choose from could be overwhelming, so being able to filter according to requirements would make it much more usable. We also invited Lydia Arnold (Harper Adams University) to act as a consultant, and together we reviewed and refined the cards.

Deciding on the search categories was central to the usefulness of the resource. After some discussion, we opted to use the categories of summative assessment in UCL’s Assessment Framework as the entry point, as these are common in UK Higher Education:

- Controlled condition exams.

- Take-home papers/open book exams.

- Quizzes and in-class tests.

- Practical exams.

- Dissertations.

- Coursework and other written assessments.

Margaret Bearman and colleagues from Deakin University, in their highly informative CRADLE webinar series on GenAI, suggest that we have roughly a year to turn things around in education. In this light, it may seem strange to include controlled condition exams. However, much as we want (or need) radical transformation, we felt it would be more helpful to meet people where they currently are, which, as mentioned above, often means the familiar territory of exam-based assessment.

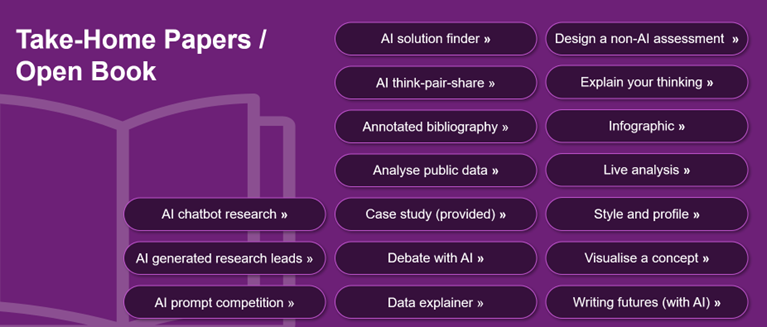

The Jisc design team did a fabulous job of turning the original PPT version into the interactive resource you can now find in the Uses in Learning and Teaching section (scroll down to locate it). Using clickable icons representing each of the above (on slide 7), users can be presented with options to consider.

Image: example search results for Take-Home Papers/Open Book

Like any AI-related guidance we produce right now, this will need to be updated as the technology and assessment practice evolve together.

The questions I am most frequently asked are ‘How can I make my assessments AI-proof?’ and ‘How can I stop students using AI when they’re not supposed to?’ This resource does not promise to answer either of these questions, but the hope is that this genuinely collaborative menu of assessment ideas, drawing on expertise from the UK and beyond, will provide inspiration for educators and support them to work alongside students to engage productively and thoughtfully with AI in their assessments.

Find out more by visiting our Artificial Intelligence page to view publications and resources, join us for events and discover what AI has to offer through our range of interactive online demos.

For regular updates from the team, sign up to our mailing list.

Get in touch with the team directly at AI@jisc.ac.uk