Legacy post: AI is a fast-moving technology and unfortunately this post now contains out of date information. The post is now available just for those that need to reference older articles. We have a more recent post exploring image generation available here.

Introduction

Over the last few months, we have seen huge advances in the ability of AI to create images. As this is such a new development, we are still at the start of understanding the educational use cases. Possibilities include educators using it to create learning resources and students using it in creative courses or to illustrate coursework. It’s also likely to have a big impact on the way creative industries work, and we are already starting to see this technology being integrated into mainstream products such as Microsoft Designer.

This blog post is a quick guide on how to start exploring these tools. We’ll have a look at how to try them out, and how to examine the training data behind them, as this is a good way to start understanding how tools like these work.

A quick overview

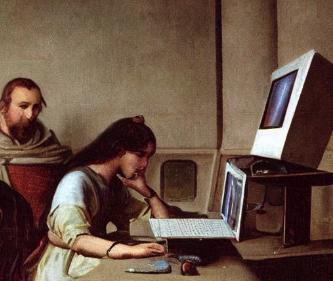

To use an AI image generation tool, you simply give the tool a text prompt, and it then generates one or more images. If you’ve seen these on social media you might well have seen lots of fun examples. Here’s an example:

Prompt: “A cat playing a guitar in the style of Claude Monet”

This isn’t an image that has existed before – it’s been created by the AI model. The underlying AI model has been trained on billions of images scraped from the internet. The detail of how the actual AI model works is outside the scope of this blog post, but it is easy to understand and explore the training data. We’ll talk a bit more about this in the last section of this blog post.

How to try out AI image generation yourself

We’ll now have a look at three different tools you can use to start exploring AI image generation – a demo on AI community site Hugging Face, Dream Studio and Dalle-2.

Hugging Face’s demo is completely free, whereas the other two are paid services. Both offer a free trial when you sign up, which allows you to generate several images for free.

Hugging Face’s demo

I’d recommend first trying out Hugging Face’s Stable Diffusion demo, as this is available with no restrictions and no signup process. It is based on Stable Diffusion, which, along with Dalle-2, is one of the main AI models currently gaining attention. You can access a demo here:

https://huggingface.co/spaces/stabilityai/stable-diffusion

(Note: these demos are updated regularly, so if this link doesn’t work for you, go to https://huggingface.co/spaces, search for ‘stable diffusion’ and pick a recent demo.)

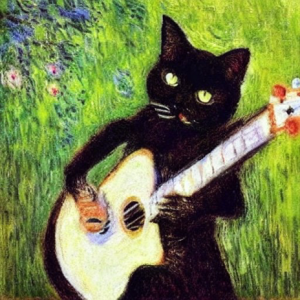

As an experiment, first try something that might already exist as an image:

e.g. “A student using a computer in a lecture theatre”

At first glance it might look like the images have just been acquired from a search engine such as Google, but look closer and you’ll see they aren’t quite accurate. In particular, the models don’t render humans perfectly at the moment. These images have in fact been generated by AI.

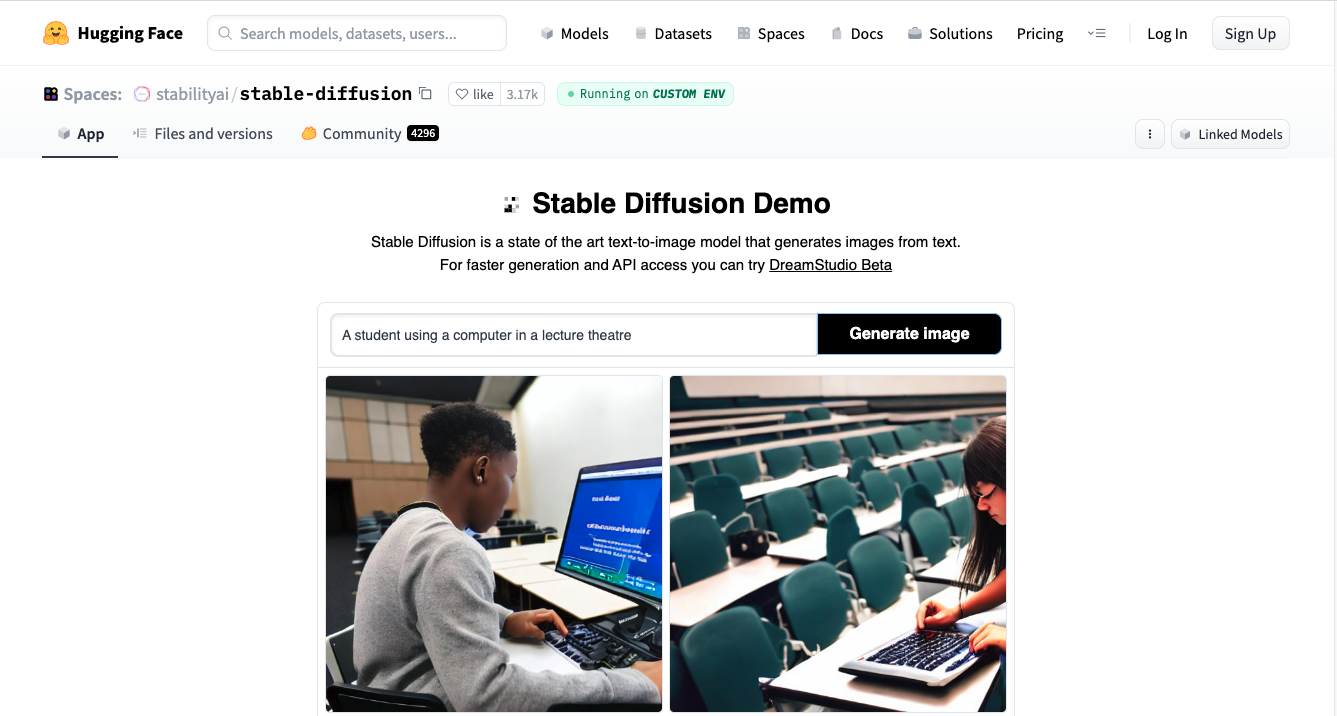

You can then explore this more by suggesting images that almost certainly don’t already exist. One thing to remember is the AI model will produce something different each time it runs. I’ve picked ‘A computer being used in Ancient Britain’ as my choice, as this is something unlikely to already exist.

You might need to run it a few times to get a good result, and some might be very strange! In my results below you can see the right-hand picture is a pretty good imaginary picture, whereas the left-hand one is just odd!

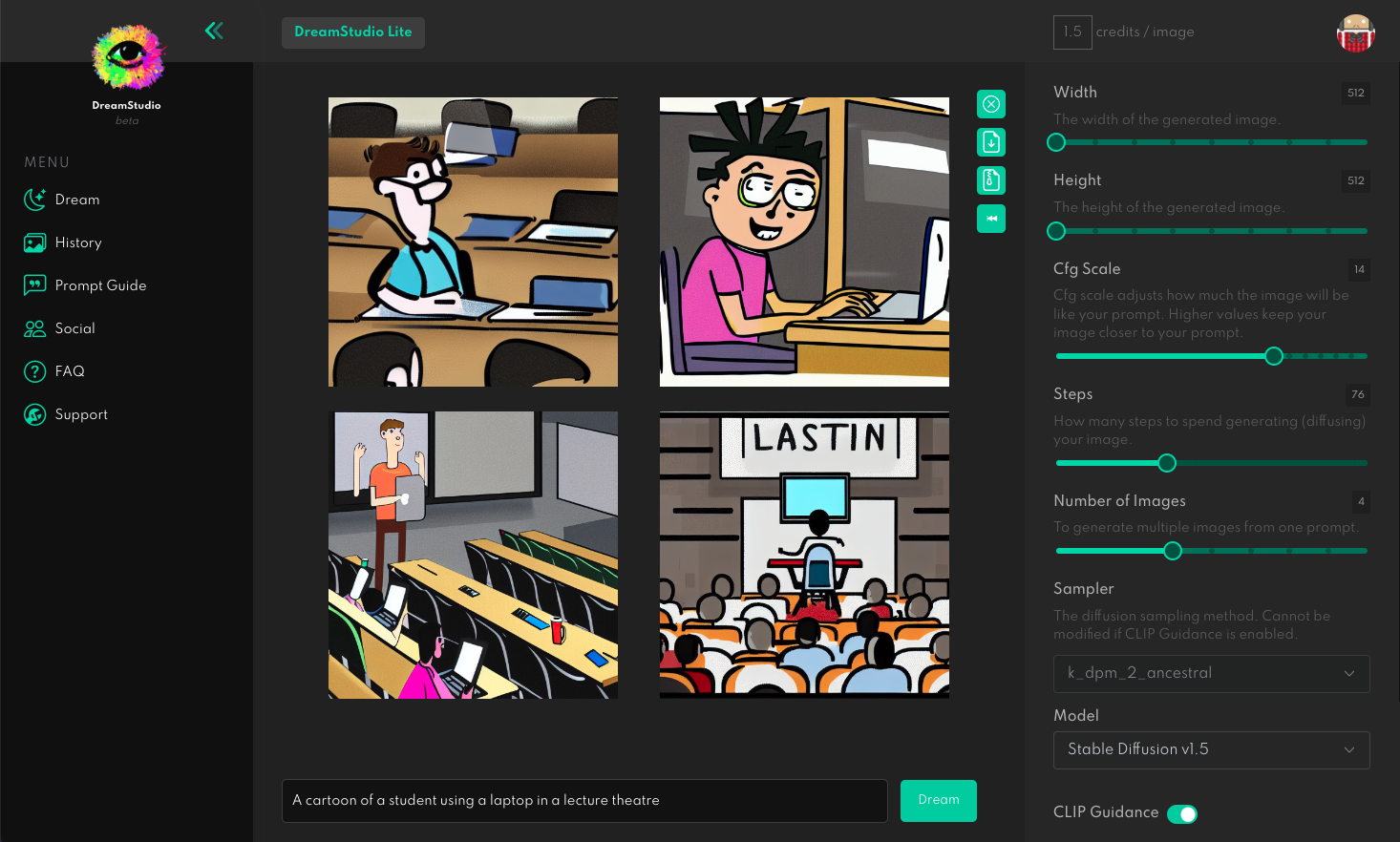
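Why do you get something different each time? Diffusion models such as Stable Diffusion start each image from random noise, so a different random start leads to a different picture. The snippet below is only a toy illustration of that idea and is not how any of these tools are implemented: the `starting_noise` function and the seed values are names I’ve made up for this sketch, and it produces a handful of numbers rather than an image.

```python
import random


def starting_noise(seed=None, size=4):
    """Return a few random numbers standing in for the noise a model starts from.

    Hypothetical helper for illustration only; a real model would use a large
    grid of noise values rather than four numbers.
    """
    # With seed=None, Python picks a fresh starting point on every call,
    # which is why repeated runs give different results.
    rng = random.Random(seed)
    return [round(rng.gauss(0.0, 1.0), 3) for _ in range(size)]


# Two runs with no fixed seed: almost certainly different.
print(starting_noise())
print(starting_noise())

# Two runs with the same fixed seed: always identical.
print(starting_noise(seed=42) == starting_noise(seed=42))
```

If a tool lets you set a seed, this is the idea behind it: keeping the seed fixed keeps the random starting point fixed, so you can change the prompt or other settings and compare results more fairly.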

Dream Studio

Dream Studio can be accessed at https://beta.dreamstudio.ai/. This is a paid-for service, but you get 200 free credits, which should allow you to create 200 images.

Although it uses the same model as the Hugging Face demo, it has a number of parameters that can be tweaked to explore the generation in a little more detail. Try these and see what they do.

The technique you use is the same as for Hugging Face’s demo. In the example below, this time I’m asking it to produce ‘a cartoon image of student using a laptop in a lecture theatre’.

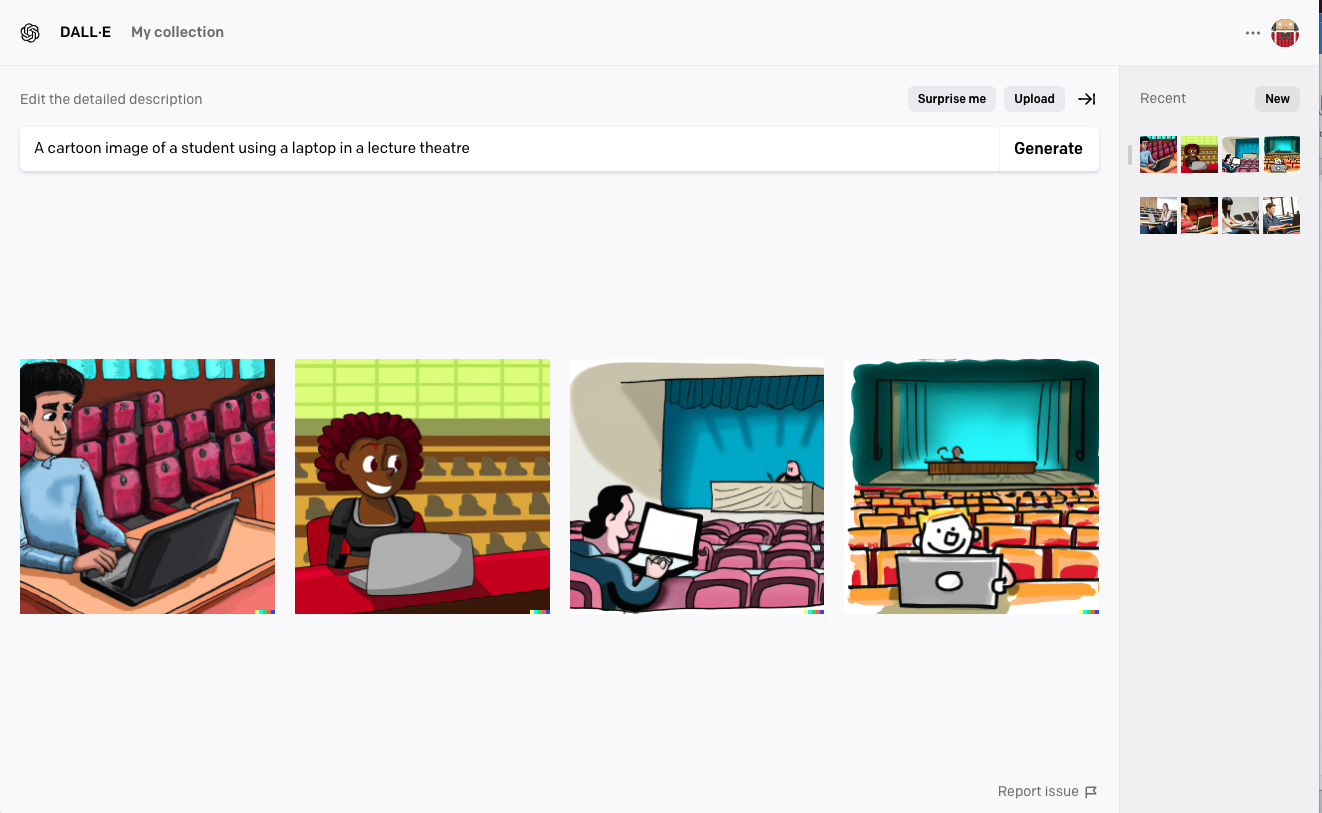

Dalle-2

Dalle-2 can be accessed at https://openai.com/dall-e-2/, and as with Dream Studio, you get free credits, 50 in this instance. You also get an additional 15 free credits each month, whereas with Dream Studio, once you have used your credits they aren’t replenished unless you pay for more.

You’ll find a user interface that is very similar to the Hugging Face demo. The difference here is that it’s based on the Dalle-2 model created by OpenAI. You might find it interesting to explore the difference in the outputs of the two models by trying the same prompt in both. Here I’ve repeated my ‘cartoon image of students using a laptop in a lecture theatre’ example and the results are very similar.

What else to try?

You may be wondering about these tools’ ability to create images of real people, in effect ‘Deep Fakes’. Initially Dalle-2 prevented this, but that’s no longer the case. So, try out an example, maybe a famous person riding a horse or playing tennis. Currently these images wouldn’t be mistaken for real pictures, but the technology is progressing at an incredible rate, so we’ll need to start considering what this means for society as a whole and, closer to home, for staff and students.

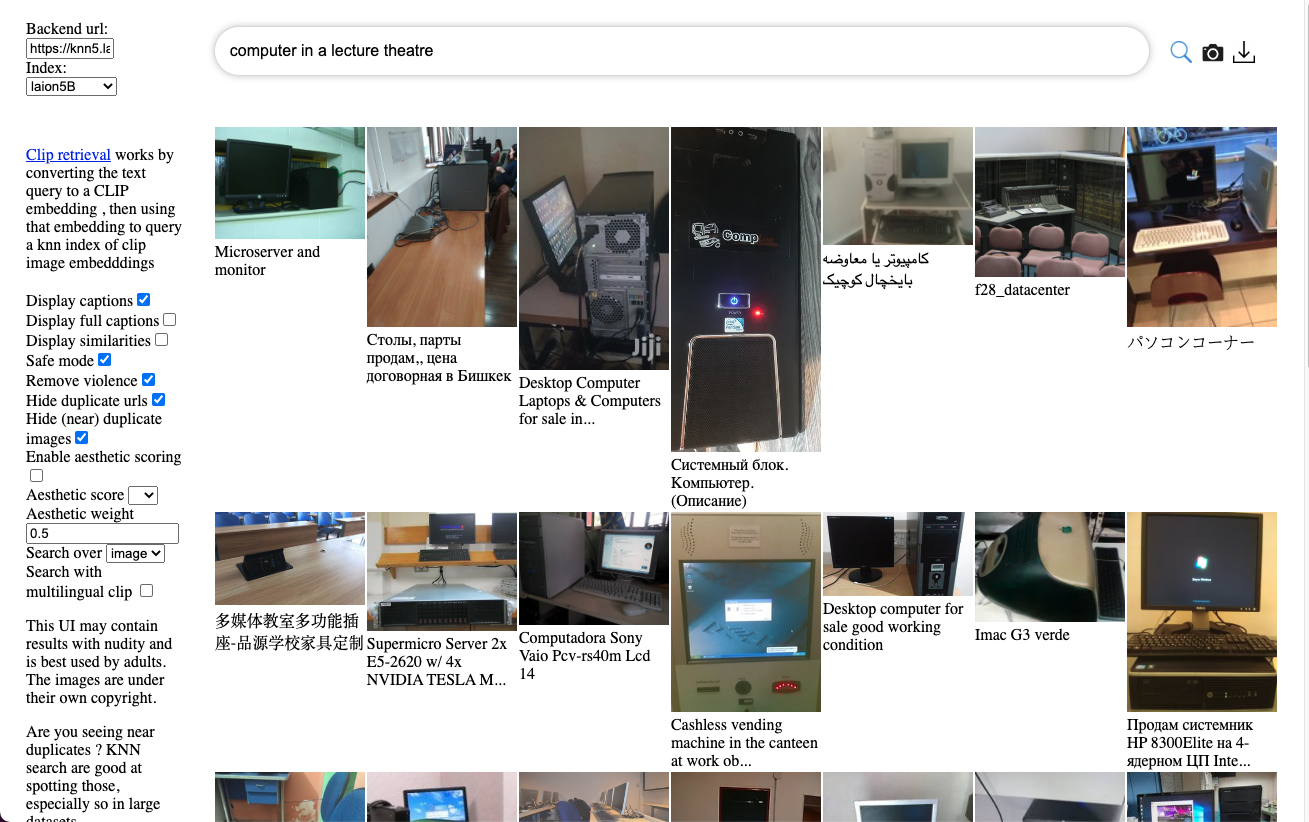

How to explore the training data

As we noted earlier, the underlying model has been trained on billions of images scraped from the internet, and we can explore this data ourselves. This will help us understand the way these models work and help us start thinking about the kinds of issues they raise around copyright, fair use and ownership.

More specifically, these models have been trained on image-text pairs – i.e. images along with a short text description. One of the main data sets used for this, LAION, has been made open source, and you can explore this yourself. This will help you understand how these images are produced.

You can use a tool to search across all 5.3 billion images in the LAION-5B training set, so you can get a feel for its content. Try this here: https://rom1504.github.io/clip-retrieval/. The user interface of this tool is very basic, but it does allow you to get a sense of the data.

You can search across the data set to explore the images. It’s not a straight text-matching search, but instead uses a technique called CLIP, which you can read about here: https://openai.com/blog/clip/. Try searching for a topic you are familiar with, maybe your college or university. Are you surprised by, or comfortable with, the images used? You might well be asking how this works from a copyright perspective.
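To give a feel for how a search can work without matching words, here is a toy sketch of the general idea behind this kind of retrieval: each image-text pair and each query is turned into a list of numbers (an embedding), and the search returns the pairs whose numbers point in the most similar direction. Everything specific here is an assumption for illustration: the three pairs, their example.org URLs and their three-number embeddings are hand-made, whereas real CLIP embeddings come from a trained model and have hundreds of dimensions.

```python
import math
from dataclasses import dataclass


@dataclass
class ImageTextPair:
    url: str                # where the image lives (placeholder URLs here)
    caption: str            # the short text description scraped with it
    embedding: list[float]  # hand-made stand-in for a CLIP vector


def cosine_similarity(a, b):
    """Similarity of two vectors by direction: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query_embedding, pairs, top_k=2):
    """Return the top_k pairs whose embeddings are closest to the query."""
    ranked = sorted(
        pairs,
        key=lambda pair: cosine_similarity(query_embedding, pair.embedding),
        reverse=True,
    )
    return ranked[:top_k]


# Hypothetical data set of three image-text pairs.
PAIRS = [
    ImageTextPair("https://example.org/1.jpg", "students in a lecture theatre", [0.9, 0.1, 0.0]),
    ImageTextPair("https://example.org/2.jpg", "a cat playing a guitar", [0.0, 0.2, 0.9]),
    ImageTextPair("https://example.org/3.jpg", "undergraduates at a seminar", [0.8, 0.3, 0.1]),
]

# Pretend this is the embedding of the query "university class".
query = [1.0, 0.2, 0.0]

for pair in search(query, PAIRS):
    print(pair.caption)
```

Notice that neither of the two best matches contains the words ‘university’ or ‘class’. They rank highly because their embeddings are close to the query’s, which is why this kind of search can surface images a plain keyword search would miss.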

We’ll talk more about copyright in the future, but in the meantime, this article from CNN gives a flavour of how this is impacting artists.

Summary

If you haven’t used AI image generation tools before, hopefully this guide will have been useful in getting you started. If you have used them, maybe you haven’t explored the training data before, and have found this interesting. These tools are progressing at an incredible rate at the moment: for example, they have quickly moved on to generating video and extending existing images. Now is a good time to start exploring them and thinking about how they might impact your work. If you’ve got any specific areas you would like us to explore, contact us at ai@jisc.ac.uk.
