Last week we hosted a webinar looking at how artificial intelligence has the potential to disrupt student assessment. In this post we reflect on the event and share some of the ideas generated and questions asked.

The topic certainly seems to be of wide interest, with over 130 attendees.

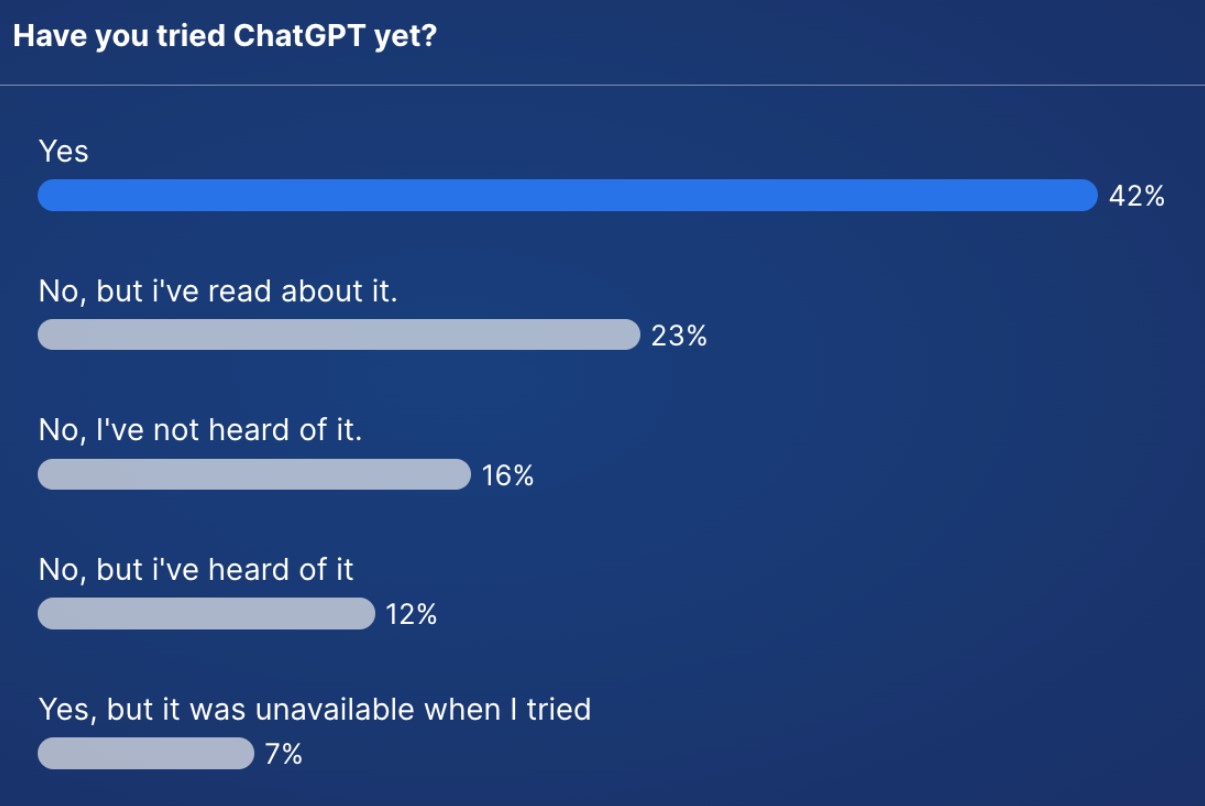

We started with a quick poll to see how many of the attendees had used ChatGPT. Obviously, it’s had a huge amount of coverage, and the attendees were people with an interest in AI, but the number of people who have tried it is still incredibly high! Normally when we present on a very new technology the number of users is much lower. The high figure here reflects both how interesting and exciting the technology is, and how much mainstream coverage it has had.

A brief overview of the presentation

In the session we started by looking at the underlying concern – that AI is now capable of writing student assignments. We looked at how this was being reported in the media and how tools were being marketed to students.

We then looked at the actual capability of the tools, including how they can almost write essays, but are also prone to writing ‘untruths’. We took a look at the technology behind this and showed why this might be the case.

We ended by reflecting on what we’d seen and how these tools could help us, noting that an arms race between generative AI and plagiarism detection isn’t going to help anyone. We said that we are going to see huge growth in AI-assisted writing, AI-driven assistants to help research information, and AI-driven tools to help teachers, and that we need to adapt to make the most of the opportunities that are coming.

A video of the presentation is available in the resources section of the event’s page.

Suggestions on what next

There was, as you might imagine, lots of lively discussion in the chat, and over 50 suggestions put forward on what we might do next.

One core thread that ran through a number of them was that we need to reduce panic in our institutions – we do have time to get policy right, but we need to start the process now.

We’ve picked up some of the core themes and given examples of the suggestions that were made under each theme.

Policy

- “Work on policy in this area, articulate to HEIs that there is no need to panic, support us in supporting our institutions”

- “Short term we need to adapt our policy on the use of tools.”

Sector advice

- “A sector-level statement and advice to institutional senior management”

- “Help us present and explain assistive computation as an opportunity rather than a threat to our institutions and academic colleagues.”

- “Work with other organisations and professional bodies to calm the alarm”

- “I feel like we need some common messaging- mythbusting etc as there is this tendency, mentioned in chat, to knee-jerk to in-person exams as default- can Jisc do that? Offer that guidance? make it sector level.”

Community

- “Attempt to convene a sectoral conversation around this. ChatGPT takes AI out of niche interest and makes more of a common response necessary.”

Advice for academic staff

- “Help us to write comms for academic colleagues on this. Lots of panic out there!”

- “Awareness raising for educators of the tools available”

Advice to students

- “Advice for students – how to use these tools ethically”

- “Should students be briefed on the use of AI as we do other aspects of unfair means? And should staff be trained to look out for it?”

Assessment

- “Guidance on how to AI-proof assessment questions”

- “How to integrate the existence of these tools into assessment rather than ‘legislate’ against them.”

- “Give advice about ways to change assessment to take advantage of AI and minimise abuse.”

Questions and Answers

We ended with a Q+A session. Here we share some of the questions asked and briefly summarise the answers we gave at the time. We’ll develop much fuller answers to some of these going forwards.

Question: Can universities afford to ignore generative AI?

Our answer: No – it’s with us whether we like it or not, and students will start using it, assuming they aren’t already.

Question: Are there universities that have already taken concrete actions with regard to assessment due to these developments? And, in your eyes, are these measures that were taken the right ones?

Our answer: We haven’t found any examples of this, but we’d love to hear if your institution has. There’s been a survey on our aied@jiscmail.ac.uk list to collate this sort of information.

Question: What sort of price is it for students to use these tools compared to more traditional commissioning services? If they’re not already cheaper, is it going to be much longer until they are?

Our answer: At the moment some tools, ChatGPT for example, are free for a certain amount of use, but we don’t expect that to continue. Typically these tools cost around £10 a month, and we’re concerned that this will create a digital divide between those that can afford access to AI services and those that can’t.

Question: Does it work in the Welsh language?

Our answer: I wasn’t actually sure on the call, and said I thought it probably didn’t. I was wrong!

Question: What should institutions do right now to calm panic amongst the teaching community?

Our answer: We’d welcome your thoughts on how we can help with this, but at the moment we think the most important thing is balanced messaging about technology and what it is really capable of at the moment.

Question: It will be interesting to hear your thoughts on the use of text analysis within a broader spectrum of platforms other than LMSs and assessment tools, e.g. student record systems, campus-student comms, chatbots etc.

Our answer: At the moment we’ve been focusing on AI for teaching and learning, so we haven’t looked at student record systems or campus communications systems, although we have piloted chatbots.

Question: You haven’t covered development of computer code. The accuracy seems to be much higher in this field. Could we use this as a teaching aid for programming courses?

Our answer: We have a lot of software developers in Jisc, so its ability to code is of interest to us from a practical perspective. It seems to work very well, so using it as a tool for programming courses looks like a really good use case.

Question: Is the concept of the “essay” as a primary mode of assessing learners now threatened? Could this push us to more problem-based learning type techniques as assessment?

Our answer: Yes, it is certainly changing the concept of the essay, and it’s going to act as a driver to change practice, so we have a real opportunity here. This is something we are really keen to work with institutions on.

Next Steps

We ended the webinar with the following calls for action:

- We are interested in what would be most useful to you – so let us know if you have any other ideas not covered above.

- We want to work with staff including lecturers and teaching staff to explore use cases in more detail – let us know if you are interested.

- Explore our demos and examples and give us feedback.

We’ll be reviewing all the ideas over the next week or so, and we’ll be in touch via the jiscmail list aied@jiscmail.ac.uk for next steps.

Find out more by visiting our Artificial Intelligence page to view publications and resources, join us for events and discover what AI has to offer through our range of interactive online demos.

For regular updates from the team sign up to our mailing list.

Get in touch with the team directly at AI@jisc.ac.uk