You may have picked up on some of the hype around Google’s Gemini last week, and some of the controversy over the slightly fake video. They also released a 60-page technical report. I thought it might be useful to pick up some of the key facts from the report, and some thoughts about what it might mean for education.

What actually is Gemini?

Gemini is Google’s new generative AI model, aiming to compete with ChatGPT. Most people will probably first come across it in Google Bard – Google’s equivalent of ChatGPT or Copilot (formerly Bing Chat).

Google announced three models.

Ultra is targeted at ‘highly-complex tasks’, and isn’t yet available. It’s not clear where this will be deployed, but it will probably be fairly expensive to access. It looks to perform slightly better than GPT-4.

Pro is likely to be the version that is widely available. It looks to perform slightly worse than GPT-4 but better than the original ChatGPT, including the current free version. Google Bard will use this version shortly (in fact, for some people it already does).

Nano is a cut-down model, which can be run, for example, on your phone to do basic tasks like summarisation without sending data to the internet.

Gemini is trained on video, sound, code and images as well as text, so in theory it will be able to do mixed-mode tasks better – there’s a student work example in the next section.

What does it mean for education?

A simple one first: Google Bard is now going to be a better choice than the free version of ChatGPT for most people. So if you are using free ChatGPT, it’s probably time to make the switch, either to Bard or to Copilot (formerly Bing Chat).

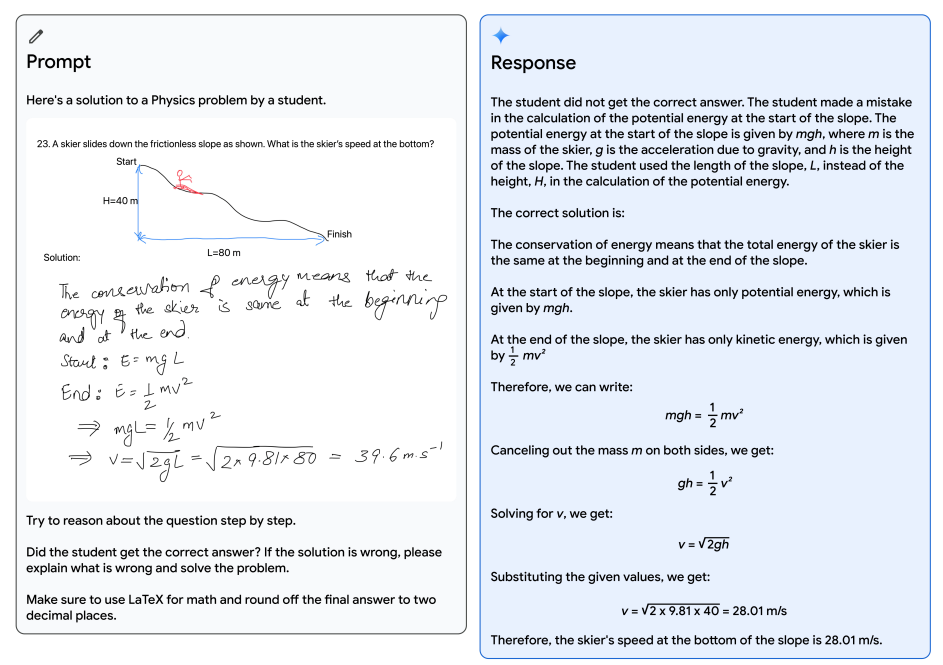

The next thing is that it’s notable how much the report focuses on education. Its first example shows how Gemini can use its multimodal capability (e.g. reading an image and text together) to help mark a student’s work:

Note how well it reads the student’s handwriting, interprets the diagram, and follows the instructions to reason about the student’s response. Google commented that this ‘opens up exciting educational possibilities,’ and it’s easy to see that a tool like this providing early feedback or 24/7 help to a student would be attractive if it was reliable.

In the evaluation section of the report, they focus on academic benchmarks (i.e. standard academic tests often used on these models) and give a detailed table on how Gemini performs compared to others (e.g. GPT, Claude). The short version is that the Ultra version of Gemini performs slightly better than ChatGPT (GPT-4) on most topics (maths, coding, reading, comprehension). In contrast, the Pro version (the version most people will have access to) performs slightly worse than GPT-4 and better than GPT-3.5. Their conclusion?

“Gemini Ultra’s impressive reasoning and STEM competencies pave the way for advancements in LLMs within the educational domain. The ability to tackle complex mathematical and scientific concepts opens up exciting possibilities for personalized learning and intelligent tutoring systems.”

I think this is probably a fair comment, so we are likely to see some pretty interesting education tools built on top of this emerge over the next year.

Training and training data

Disappointingly, they give almost no detail of the training data. This has been the case with OpenAI too since GPT-4, although we did get some detail for GPT-3.5. All Google says is:

“Gemini models are trained on a dataset that is both multimodal and multilingual. Our pretraining dataset uses data from web documents, books, and code, and includes image, audio, and video data”

Google give a very broad explanation of the approach to make the data safe, including:

“We apply quality filters to all datasets, using both heuristic rules and model-based classifiers. We also perform safety filtering to remove harmful content. We filter our evaluation sets from our training corpus.”

So again, this is very similar to GPT-4, including the lack of detail.

They also describe how a combination of supervised fine-tuning and reinforcement learning from human feedback is used – i.e. using people to fine-tune the model to make it behave helpfully and safely, but again there is no detail about who does this or how.

The paper also gives a broad explanation of how they try to make the model avoid processing harmful content and avoid ‘hallucinations’ (i.e. making things up). Again, there is a disappointing lack of detail here, and nothing that’s really worth drawing attention to.

There’s also a statement that says all data workers are paid at least a local living wage. There’s more detail on this page. Note it says nothing about how exposure to harmful material is dealt with.

I really would like to see regulation and legislation on a global scale that requires providers to give much more transparency on training data and processes. This would help us make much more informed decisions around assessing bias, accuracy, ethical use of content etc., partly as it would allow us to focus testing on areas of concern based on researchers’ analysis of the training.

Conclusion

From a technical perspective, the multimodal capabilities look interesting. From an educational use case point of view, many disciplines may benefit from AI that can work with video, images and sounds just as well as text.

It’s perhaps slightly surprising that the basic model that most of us will soon have access to is going to perform slightly worse than GPT-4, given that GPT-4 is quite a few months old now. There’s speculation that this is an indication that the technology is plateauing, although equally, it could just show how much of a lead OpenAI had. We won’t really know until GPT-5 is released.
