When ChatGPT was first released, there was (and perhaps still is) some confusion about what it was doing. We mostly got used to the fact that it was, in effect, a text prediction tool, albeit one trained on vast amounts of data, with often quite amazing abilities. This meant that it was prone to making things up (hallucinations). That is useful when using LLMs for creative purposes, but less useful when using them to understand or find factual information. What it wasn’t doing was understanding a question and then searching for the answer.

Things have got a little more complex now, with various other methods appearing, often loosely referred to as ‘grounding’ the model. A basic understanding of what’s happening is a useful part of AI literacy, so in this post we’ll look at one of the most common techniques, usually just written, somewhat confusingly, as RAG. Not Red Amber Green, but Retrieval-Augmented Generation. RAG extends the capabilities of LLMs by adding a retrieval step.

RAG (Retrieval-Augmented Generation)

In many ways, RAG functions similarly to how many people initially assumed ChatGPT worked. Essentially it works out what you are asking, does a search for the answer, and then composes a nice answer to your question. Like many generative AI ideas, it’s not new – it can be traced back to a 2020 paper by Meta – ‘Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks’. Its name covers the main stages of the process.

Once the user enters a query, this happens:

- Retrieval – The user’s query is used to formulate searches across the information sources, which could be databases, document repositories, web pages etc, to find relevant information.

- Augmentation – The information found is added to the original prompt (or query) from the user.

- Generation – The language ability of the LLM is used to generate a helpful response based on the retrieved information.
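To make the three stages a little more concrete, here is a minimal sketch in Python. It is an illustration only, not how any particular product works: the `DOCUMENTS` collection and the function names are made up for this example, retrieval is done by simple word overlap rather than a real search, and `generate` is a placeholder where a real system would call an LLM.

```python
import re

# Hypothetical mini knowledge base. In a real system these would be chunks
# of documents, web pages or database records.
DOCUMENTS = {
    "library-hours": "The library is open from 9am to 5pm on weekdays.",
    "printing": "Printing costs 5p per page in black and white.",
    "wifi": "Students connect to wifi using the eduroam network.",
}


def words(text):
    """Lower-case a piece of text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=1):
    """Retrieval: rank documents by how many words they share with the query."""
    query_words = words(query)
    ranked = sorted(
        documents.items(),
        key=lambda item: len(query_words & words(item[1])),
        reverse=True,
    )
    return ranked[:top_k]


def augment(query, retrieved):
    """Augmentation: add the retrieved text to the user's original query."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieved)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


def generate(prompt):
    """Generation: placeholder only. A real system would send the prompt to an LLM."""
    return f"(an LLM would answer here, given a prompt of {len(prompt)} characters)"


query = "When is the library open?"
retrieved = retrieve(query, DOCUMENTS)
prompt = augment(query, retrieved)
print(prompt)
print(generate(prompt))
```

The point to notice is that the LLM never searches for anything itself. By the time it is asked to write, the relevant text has already been found and pasted into the prompt.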

RAG and referencing

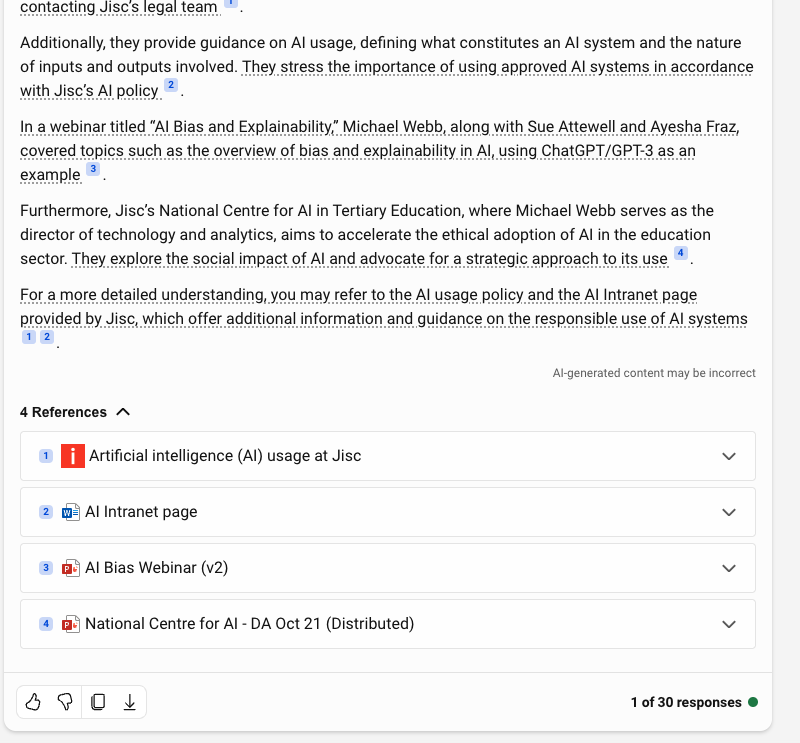

One of the big issues people often raise with straight LLM chatbots is that they can’t reference the information they present. This is because they don’t actually know where it came from! Training doesn’t store the source text itself; the model keeps only learned patterns representing the concepts in the text, with no record of which document a given fact came from. RAG is different – the system matches the query to specific parts of a document, and so knows where it gets the answer from. This means that it can provide references to the source information, often at quite a detailed level, allowing you to fact check the information.
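A rough sketch of why referencing comes almost for free with RAG: if each stored chunk carries a note of where it came from, the system can hand that back alongside the answer. Everything here is hypothetical, including the `Chunk` class, the file names and page numbers, and the word-overlap matching, which stands in for a real search.

```python
import re
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    source: str  # hypothetical file name
    page: int    # hypothetical page number


CHUNKS = [
    Chunk("Coursework extensions must be requested before the deadline.",
          "assessment-policy.pdf", 4),
    Chunk("Exam results are published in July.",
          "student-handbook.pdf", 12),
    Chunk("Late coursework is capped at the pass mark.",
          "assessment-policy.pdf", 5),
]


def words(text):
    """Lower-case a piece of text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, chunks, top_k=2):
    """Return the chunks sharing most words with the query, ignoring non-matches."""
    query_words = words(query)
    scored = [(len(query_words & words(c.text)), c) for c in chunks]
    matches = [(score, c) for score, c in scored if score > 0]
    matches.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in matches[:top_k]]


def references(chunks):
    """Build a human-readable reference for each retrieved chunk."""
    return [f"{c.source}, page {c.page}" for c in chunks]


query = "When must coursework extensions be requested?"
found = retrieve(query, CHUNKS)
refs = references(found)
for ref in refs:
    print(ref)
```

Because the source and page travel with the text, the reference is a record of what was actually retrieved, rather than something the LLM has to remember or invent.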

Some of the technical details

If you read about RAG you’ll probably come across a few terms, including Data Chunking and Vectors or Embeddings. We aren’t going into lots of technical detail here, but here’s a brief summary of some of the main details:

- Data Chunking – The source data is split into manageable chunks, for example chapters, paragraphs or sentences if it’s a text document. This makes it easier and quicker to search.

- Vectors or Embeddings – The information is transformed into a format that is easy for AI systems to use: mathematical vectors. Don’t worry too much about the detail, but it’s useful to know that the text is encoded in a way that represents the actual meaning of the words and their relationship to other concepts, rather than the words themselves. So the concepts ‘cat’ and ‘bird’ will be closely related as they are both living things, and ‘bird’ will also be closely related to ‘aeroplane’ as they can both fly, but ‘cat’ and ‘aeroplane’ won’t be.

- Vector database – The encoded data is often held in a special sort of database, called a vector database, which makes it easier to do similarity searches on text. In effect it’s searching for similarity of concepts, not words.
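For the curious, the ideas in the list above can be sketched in a few lines of Python. This is a toy under stated assumptions: the two-number vectors are hand-made for illustration (the first number means ‘is a living thing’, the second ‘can fly’), whereas real embeddings are produced by a trained model and have hundreds or thousands of dimensions. Cosine similarity is one common way of comparing vectors; the function names are made up for this example.

```python
import math


def chunk_sentences(text):
    """Data chunking: split a piece of text into sentence-sized chunks."""
    return [s.strip() for s in text.split(".") if s.strip()]


# Hand-made "embeddings" for illustration only: [is a living thing, can fly].
EMBEDDINGS = {
    "cat": [1.0, 0.0],
    "bird": [1.0, 1.0],
    "aeroplane": [0.0, 1.0],
}


def cosine_similarity(a, b):
    """Compare two vectors: values near 1 mean similar, 0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    length_a = math.sqrt(sum(x * x for x in a))
    length_b = math.sqrt(sum(y * y for y in b))
    return dot / (length_a * length_b)


def similarity(word_a, word_b):
    """Look up two words in the toy embedding table and compare them."""
    return cosine_similarity(EMBEDDINGS[word_a], EMBEDDINGS[word_b])


chunks = chunk_sentences("Cats sleep a lot. Birds can fly. Aeroplanes can fly too.")
print(chunks)

for pair in [("cat", "bird"), ("bird", "aeroplane"), ("cat", "aeroplane")]:
    print(pair, round(similarity(*pair), 2))
```

With these toy vectors, ‘bird’ scores about 0.71 against both ‘cat’ and ‘aeroplane’, while ‘cat’ and ‘aeroplane’ score 0. A vector database does the same kind of comparison, but efficiently and across very large numbers of chunks.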

Where are we seeing this in real life?

Systems rarely explain or give details on how they work. However, it’s safe to assume that many of the tools we are seeing in the education space, where you upload or point to a group of documents or webpages and can then interact with them through chat, are using a RAG-type process. The same is true for Microsoft Copilot for 365, which is built on very similar techniques.

Is this the same as when we upload a file to ChatGPT Plus?

We can upload files to ChatGPT Plus and query them, get it to summarise them and so on. This is similar to RAG, but not exactly the same: there is no need to search across multiple document sources, as in the retrieval stage of RAG, because we have provided the document ourselves.

What are the limitations?

RAG sounds great, but in practice there are some limitations. Here are a few that you might come across:

Conflicting information sources

As with any information system, it’s only as good as the data you provide. There are some specific issues with RAG-type systems though. Say, for example, you have two versions of a policy document (version 1 and version 2). If you do a traditional search, you will see there are two versions, and can make a call on which is the correct one. A RAG system, though, may just combine information from both in its response, providing incorrect information. Systems will often try to mitigate this by providing references to the source.
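As a small illustration of the two-versions problem, the sketch below assumes each retrieved chunk is labelled with the document and version it came from, then checks whether more than one version of the same document has ended up in the results. The field names, the example policy text and the `find_version_conflicts` function are all invented for this example, and real tools may handle this quite differently.

```python
from collections import defaultdict

# Hypothetical retrieval results for a question about meal allowances.
retrieved = [
    {"document": "travel-policy", "version": 1,
     "text": "Staff may claim up to £20 per day for meals."},
    {"document": "travel-policy", "version": 2,
     "text": "Staff may claim up to £30 per day for meals."},
    {"document": "expenses-guide", "version": 1,
     "text": "Claims must be submitted within 30 days."},
]


def find_version_conflicts(chunks):
    """Return documents that appear in the results under more than one version."""
    versions = defaultdict(set)
    for chunk in chunks:
        versions[chunk["document"]].add(chunk["version"])
    return {doc: sorted(found) for doc, found in versions.items() if len(found) > 1}


conflicts = find_version_conflicts(retrieved)
print(conflicts)  # {'travel-policy': [1, 2]}
```

Without a check like this, both the £20 and £30 chunks would simply be pasted into the prompt, and the LLM would have no way of knowing which one is current.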

Reference confusion

Here we are talking about referencing objects in sentences, for example with pronouns, rather than academic referencing. Life would be much easier for AI systems if language was more precise, and, for example, we always used nouns. Think about these two sentences:

- When the manager emailed the employee, he was out of the office.

- When the manager emailed the employee, he was expecting a quick response.

Two very similar sentences, but in the first ‘he’ probably means the employee, and in the second, our manager.

This is a hard problem for AI, and our documents are littered with examples like this, meaning that summarised responses, for example, are prone to getting references confused and presenting false information.

And good old-fashioned hallucination

We also mustn’t lose sight of the fact that the LLM is still generating the response. The chance of straight hallucination is much reduced, but it still happens: the model can write false sentences even though it had access to the correct information, because in the end it is still using the LLM’s text prediction approach to generate the answer.

And next?

Techniques such as RAG are almost certainly going to evolve, and tools using AI are going to become more and more useful. We are fairly sure this is going to happen by combining LLMs with other techniques, in the same way as RAG does, for example RAFT (Retrieval Augmented Fine Tuning). In the meantime, one of the challenges is to keep training courses, documents and so on up to date, and to figure out how to not just get staff and students up to a certain level of AI literacy, but to maintain that. We are also going to have to figure out where to draw the line. We don’t, for example, all need to know how a car works, but understanding some basic concepts certainly makes life easier and safer.

If you want to explore more, here are a couple of technical resources:

- Retrieval Augmented Generation (RAG) in Azure AI Search (Microsoft) – Practical technical information about how to create RAG systems with Microsoft Azure

- Retrieval Augmented Generation (RAG) and Semantic Search for GPTs (OpenAI) – How to add RAG to GPTs

Find out more by visiting our Artificial Intelligence page to view publications and resources, join us for events and discover what AI has to offer through our range of interactive online demos.

For regular updates from the team sign up to our mailing list.

Get in touch with the team directly at AI@jisc.ac.uk