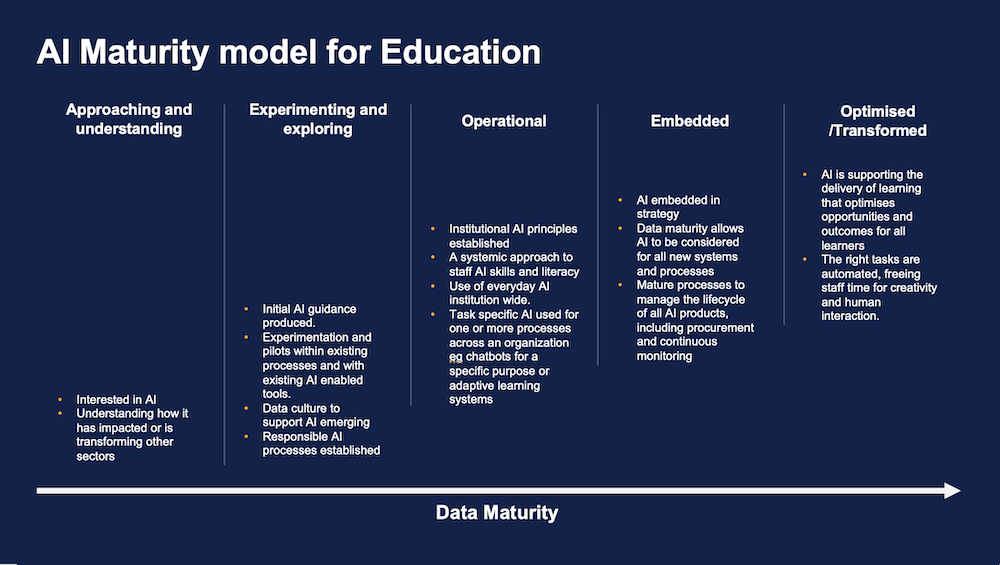

One of the first outputs our AI team produced, first released in March 2021, was our ‘AI in education maturity model’ – a tool to frame conversations about institutions’ journeys in adopting AI. The model has proven useful, but obviously an awful lot has happened in the world of AI since then. When we first created the model, if you wanted to use AI for most tasks, you’d need to start by buying an AI tool. Generative AI has changed that, bringing general-purpose AI tools to our everyday tasks. So, for many use cases, it’s now much more about staff skills than IT system procurement. Of course, general-purpose AI tools can’t do everything (yet), so there’s still a place for a more traditional procurement process. The model has been updated to reflect this. In this post, we’ll give an overview of the updated model and note the main changes from the first version.

Approaching and Understanding

At the ‘approaching and understanding’ stage, institutions will be fully aware that AI is creating many challenges and opportunities and will be at the early stages of considering how to navigate them. They will want to understand what problems AI can solve, how it works, and which issues should be considered and acted upon first. This will include investigating how AI is already being used in their sector and how it is transforming other sectors, as well as gaining a basic understanding of the mechanics of AI. They will also be aware of, and beginning to learn more about, challenges such as academic integrity, the safe and responsible use of AI, and legal issues such as intellectual property rights. Understanding the views of various stakeholders is important at this stage. What are student attitudes towards AI? What are students using? What do they understand about AI, and what concerns do they have? What are the top concerns of staff in various roles? At this stage, people will also want to understand the broader issues around AI. What are the key ethical issues? And what wider societal impacts should we consider? These include the environmental impact of AI (such as energy usage and carbon footprint) and its human impact (for example, who is encoding the data and under what conditions).

Changes from previous model – the detail of the activities here has changed a lot, but the basic descriptions remain the same, so there are no changes to the text on the model.

Experimenting and Exploring

At the ‘experimenting and exploring’ stage, institutions will have issued initial guidance to staff and students and will have started to experiment actively with AI in a systematic way. This might be through initiatives that use existing AI tools or pilot new ones. Understanding successful implementations of AI systems, even on a small scale, is beneficial at this stage. It’s also important to grasp some of the main practical issues. For instance, how does AI use data? Do we have the data we need for our applications? Which data concepts are important to understand and consider in our exploration? For example, understanding how bias occurs and how we can mitigate it is crucial. It is also necessary to explain how an AI system reaches its decisions in a way that both we and its users can understand. To enable this, establishing an approach to responsible AI is essential. Typically, this will involve reviewing existing policies and making any changes needed to enable responsible use of AI. This may include, for example, defining approved or recommended AI applications.

Changes from previous model – this section has been updated to reflect that everyday AI tools are likely to be the starting point for experimentation:

- “Experimentation and pilots within existing processes” has been updated to “Experimentation and pilots within existing processes and with existing AI-enabled tools.” In the first version of the model, most institutions would have had to purchase AI tools to gain access to them. Now, most will have access to tools like Copilot, and experimentation should begin with these rather than with new products.

- “AI Ethics process established” has been revised to “Responsible AI processes established.” We’ve done this to broaden the scope to include AI safety and the legal aspects of AI, as well as ethical issues.

Operational

At this stage, institutions will have moved beyond initial guidance to developing a set of core principles for the use of AI within their institution, and will be reviewing and updating any initial guidance to reflect these principles. AI literacy skills now become a priority, and there should be a systematic programme in place. This should cover both the mechanics of using AI tools and the skills and knowledge needed to critically evaluate outputs from an AI system. The use of everyday AI tools such as Microsoft Copilot or Google Gemini will become commonplace across the institution. Organisations at this stage will also have moved beyond working only with everyday tools and will be deploying task-specific AI tools to automate or enhance processes. This might be through procuring or enabling AI features in an existing application, or through buying and deploying a new tool. At the operational stage, institutions will have moved beyond experimentation to having AI systems live for one or more processes. There are two related core areas that we think institutions will need to understand to make this happen:

- What’s different about an AI project?

- How do we procure AI systems?

In the previous stages, we expect an institution to have gained an understanding of how AI can help and of some of the core issues in AI projects. At the heart of this is the question of what is different about AI projects and services. Fundamentally, it comes down to the difference between a service built on a conventional, rule-based algorithm, where a human can fully explain exactly how it reaches its conclusion, and one based on machine learning, where, in most cases, a human cannot explain exactly how the model reaches a conclusion. A machine learning model will almost certainly be built from some form of training data, and we need to understand what issues arise from this data. For example, is it representative, and does it contain historical biases? We therefore need new approaches to understanding and validating the performance of the software and to minimising or removing the risk of bias, along with ways of assessing these issues at procurement and monitoring them over the lifetime of the system. Our blog post “From Principles to Practice: Taking a whole institution approach to developing your Artificial Intelligence Operational Plan” provides more information and guidance to help institutions moving to this stage.

Changes from previous model – this section has changed substantially, as AI is now pervasive rather than limited to those involved in one specific AI-enabled process:

- “Institutional AI principles established” has been added. Because AI is now embedded in almost all tools and used across the institution, rather than being limited to a narrow set of applications as was the case in 2021, all institutions at this stage need to adopt a set of guiding principles.

- “A systemic approach to staff AI skills and literacy” – Since all staff now have access to AI, they require the skills to use these tools safely, responsibly, and effectively. In the 2021 version of the model, only those involved in using specific AI systems would need training because only they would have access.

- “Use of everyday AI institution-wide” – This was not a concept in 2021, but it is now central to AI use.

- “AI used for one or more processes across an organisation, e.g., chatbots for a specific purpose or adaptive learning systems” has been updated to “Task-specific AI used for one or more processes across an organisation, e.g., chatbots for a specific purpose or adaptive learning systems.” We’ve added ‘task-specific’ to differentiate from general-purpose AI like ChatGPT. Systems like this still play an important role and must be considered.

Embedded

During the early part of the operational stage, AI projects are likely to be seen as special cases. However, as AI becomes more embedded, it will simply become one of the considerations for any digital transformation project, underpinned by an organisation’s strategy. That strategy will clarify whether the aim is to optimise or to transform the organisation; we will elaborate on this in the final section. Mature data governance will play a role, as will institution-wide policies and processes. For instance, policies for monitoring AI solutions for performance and integrity will be established at an institutional level, much as institution-wide security policies are today.

Changes from previous model – this section remains very similar, with the change being more about further reflection on this stage than about changes in the technology landscape:

- ‘Mature processes to manage the lifecycle of all AI products, including procurement and continuous monitoring’ – The inclusion of procurement reflects its importance alongside continuous monitoring. This aspect could also have been included in the original model.

Optimised/Transformational

Most (but not all) educational establishments will likely look to AI to optimise their existing approaches. This might include using AI to improve the flexibility and effectiveness of learning opportunities and to remove unnecessarily burdensome processes, while still providing students with the same (or very similar) qualifications. Will we see some institutions looking to transform by delivering completely new types of learning and qualifications? At the moment, such transformation will very much be the exception. Whether an organisation is transformed or optimised, we believe there will be two main outcomes. The first is that AI will support the delivery of learning that optimises opportunities and outcomes for all learners, a more nuanced take on the idea of personalised learning. The second is that the right tasks will be automated, freeing staff time for creativity and human interaction. The emphasis here is on the ‘right tasks’: institutions should decide for themselves which tasks these are and which should remain human-led.

Changes from previous model – the changes to this section are driven more by the fact that we have had three more years to consider how education will be affected by AI than by technical changes:

- The title has changed from ‘Transformational’ to ‘Optimised/Transformed’, reflecting that either is a valid aim.

- ‘The student has a fully personalised experience’ becomes ‘AI is supporting the delivery of learning that optimises opportunities and outcomes for all learners’.

- ‘The tutor is free from all routine admin tasks to focus on supporting students’ becomes ‘The right tasks are automated, freeing staff time for creativity and human interaction’.

Find out more by visiting our Artificial Intelligence page to view publications and resources, join us for events and discover what AI has to offer through our range of interactive online demos. For regular updates from the team, sign up to our mailing list. Get in touch with the team directly at AI@jisc.ac.uk